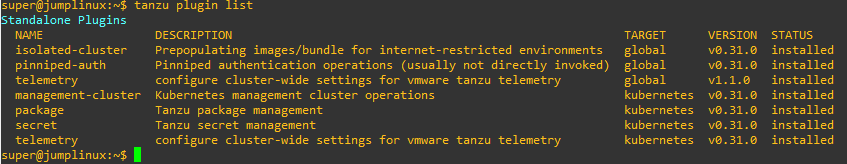

In previous articles we saw how to create a standalone TKG cluster using NSX ALB as the load balancer. Now let's see how to install and configure ALB for TKG.

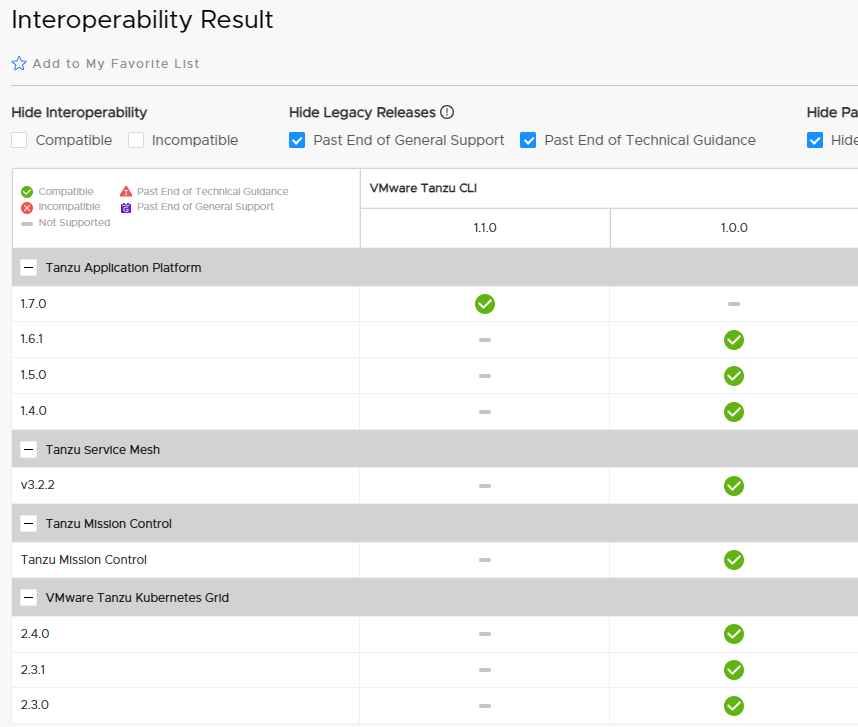

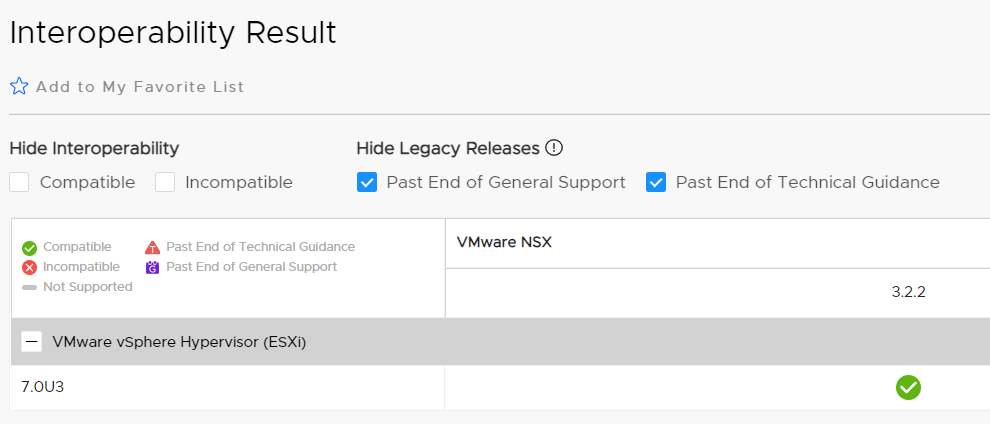

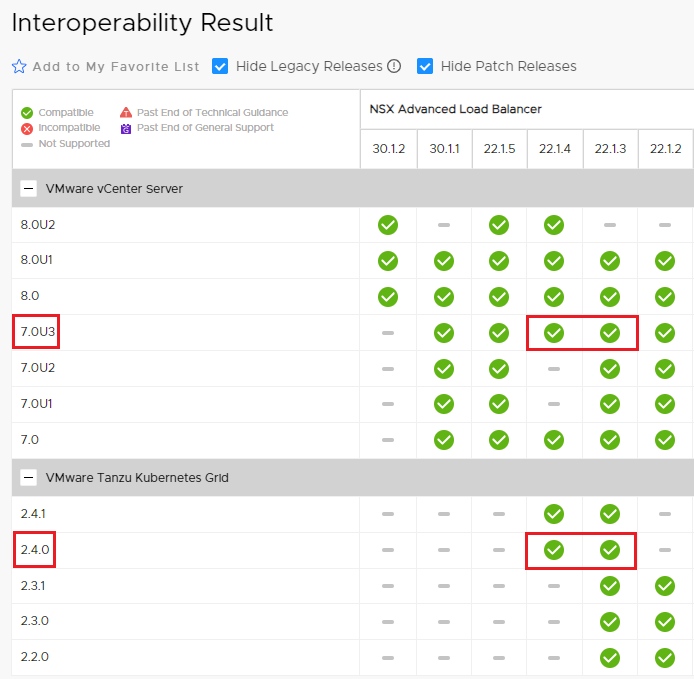

First, we need to check which ALB versions the compatibility matrix lists for the versions of vSphere and TKG we are using.

In my case, these are vSphere 7.0u3 and TKG 2.4.

The check is necessary because not all ALB releases are compatible with every vSphere and TKG version. Let's look in detail at the matrix for the versions used.

The VMware Product Interoperability Matrix is available at this link.

The versions of ALB that can be used are 22.1.4 and 22.1.3; I chose the most recent.
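
As a small aside, picking "the most recent" release from a list is safest when the versions are compared numerically, because a plain text comparison would rank 22.1.10 below 22.1.9. Here is a minimal Python sketch using only the two releases named above; the helper names are my own and not part of any VMware tooling.

```python
from __future__ import annotations


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted release string such as '22.1.4' into (22, 1, 4)."""
    return tuple(int(part) for part in version.split("."))


def latest_release(candidates: list[str]) -> str:
    """Return the highest release, comparing numerically rather than as text."""
    return max(candidates, key=parse_version)


# Releases the matrix listed for vSphere 7.0u3 and TKG 2.4 in this article.
compatible = ["22.1.3", "22.1.4"]
print(latest_release(compatible))
```

The same function keeps working if a later matrix lists a two-digit maintenance release.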

The latest patches should also be applied to Release 22.1.4.

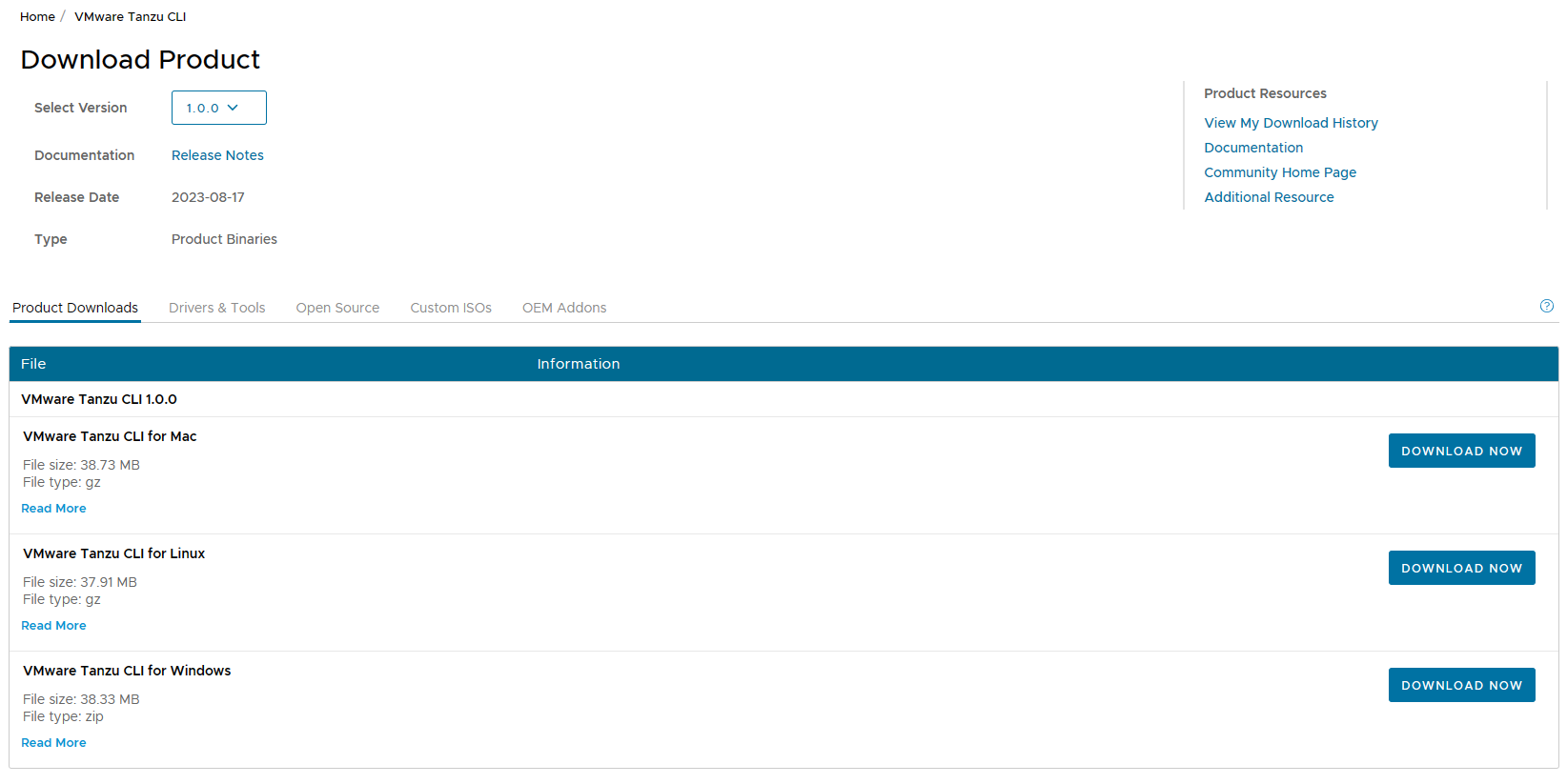

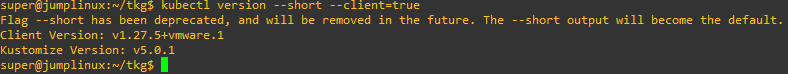

The files to download from the VMware site are as follows:

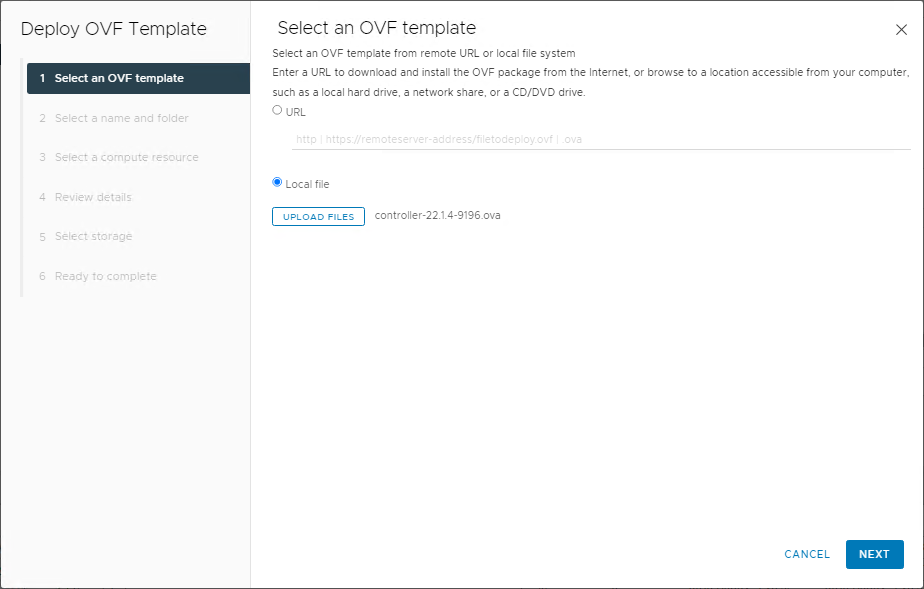

controller-22.1.4-9196.ova + avi_patch-22.1.4-2p6-9007.pkg
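
Before uploading anything, it can be worth a quick check that the patch package targets the same base release as the OVA. The sketch below assumes the naming pattern visible in the two file names above (`controller-<release>-<build>.ova` and `avi_patch-<release>-<patch>-<build>.pkg`). That pattern is inferred from this article only, not taken from VMware documentation.

```python
import re

# Patterns inferred from the two file names in this article (an assumption).
CONTROLLER_RE = re.compile(r"controller-(\d+\.\d+\.\d+)-\d+\.ova")
PATCH_RE = re.compile(r"avi_patch-(\d+\.\d+\.\d+)-(\w+)-\d+\.pkg")


def patch_matches_controller(ova_name, patch_name):
    """Return True when both files carry the same base release number."""
    ova = CONTROLLER_RE.fullmatch(ova_name)
    patch = PATCH_RE.fullmatch(patch_name)
    if ova is None or patch is None:
        raise ValueError("file name does not follow the assumed pattern")
    return ova.group(1) == patch.group(1)


print(patch_matches_controller(
    "controller-22.1.4-9196.ova",
    "avi_patch-22.1.4-2p6-9007.pkg",
))
```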

NOTE: Before starting the OVA deployment, verify that you have created the DNS records (A+PTR) for the controllers and the cluster VIP.
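
The A and PTR records can be checked from any machine that uses the same DNS servers. The following is a rough Python sketch using only the standard `socket` module. The resolver functions are passed in as parameters so the check can also be exercised without a live DNS server, and any host names or addresses you feed it are your own lab values, not something defined in this article.

```python
import socket


def check_dns(fqdn, expected_ip,
              forward=socket.gethostbyname,
              reverse=socket.gethostbyaddr):
    """Return a list of problems found for one A + PTR record pair."""
    problems = []

    # Forward lookup: the A record must point at the expected address.
    try:
        resolved_ip = forward(fqdn)
        if resolved_ip != expected_ip:
            problems.append(
                f"A record for {fqdn} is {resolved_ip}, expected {expected_ip}"
            )
    except OSError as exc:
        problems.append(f"A record for {fqdn} did not resolve: {exc}")

    # Reverse lookup: the PTR record must point back at the same name.
    try:
        ptr_name = reverse(expected_ip)[0]
        if ptr_name.rstrip(".").lower() != fqdn.rstrip(".").lower():
            problems.append(
                f"PTR record for {expected_ip} is {ptr_name}, expected {fqdn}"
            )
    except OSError as exc:
        problems.append(f"PTR record for {expected_ip} did not resolve: {exc}")

    return problems
```

Calling `check_dns(name, address)` once for each controller and once for the cluster VIP should return an empty list every time before you start the deployment.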

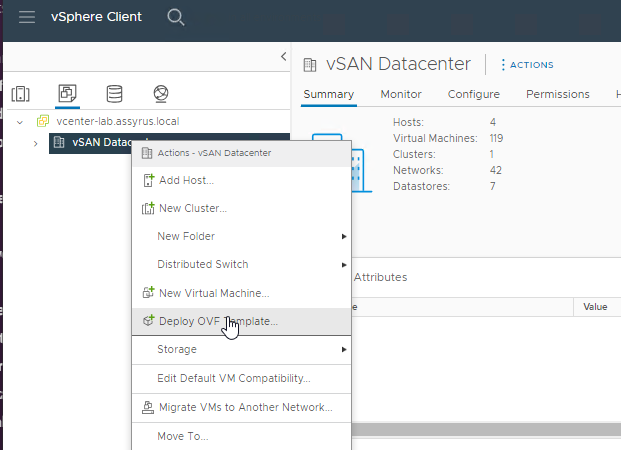

Start the deployment of the controller on our vSphere cluster.

Select the OVA file

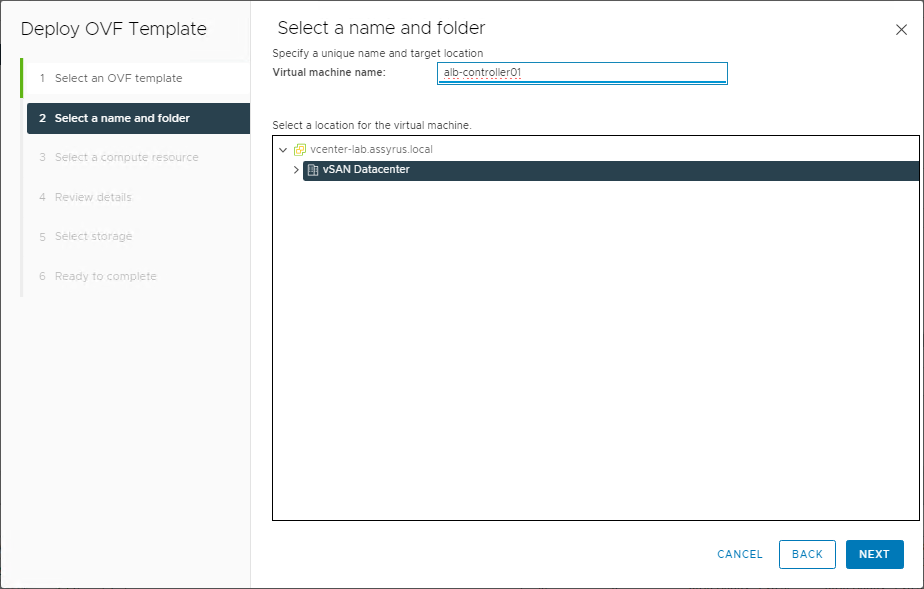

Name the VM and select the target Datacenter and Cluster

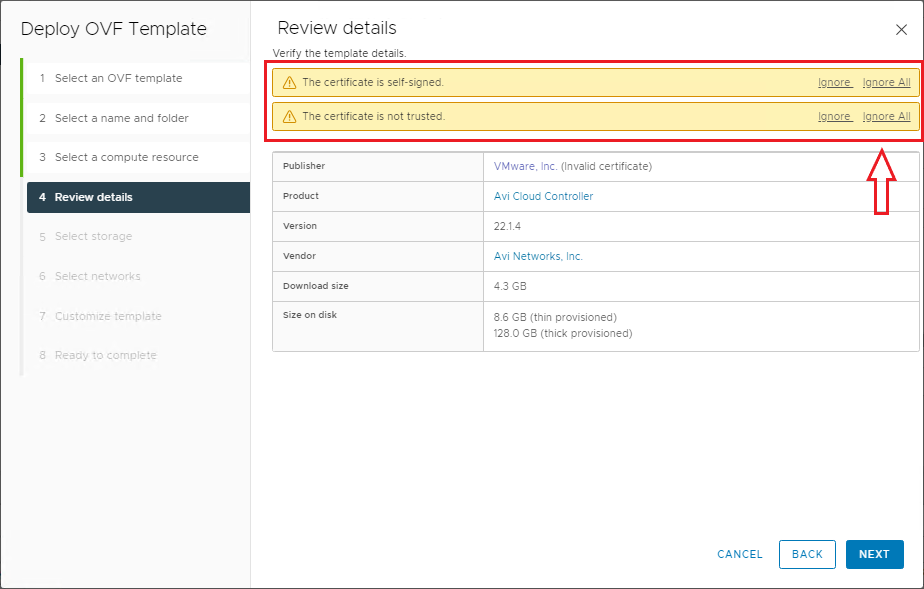

On the summary page, ignore the certificate warnings and click NEXT

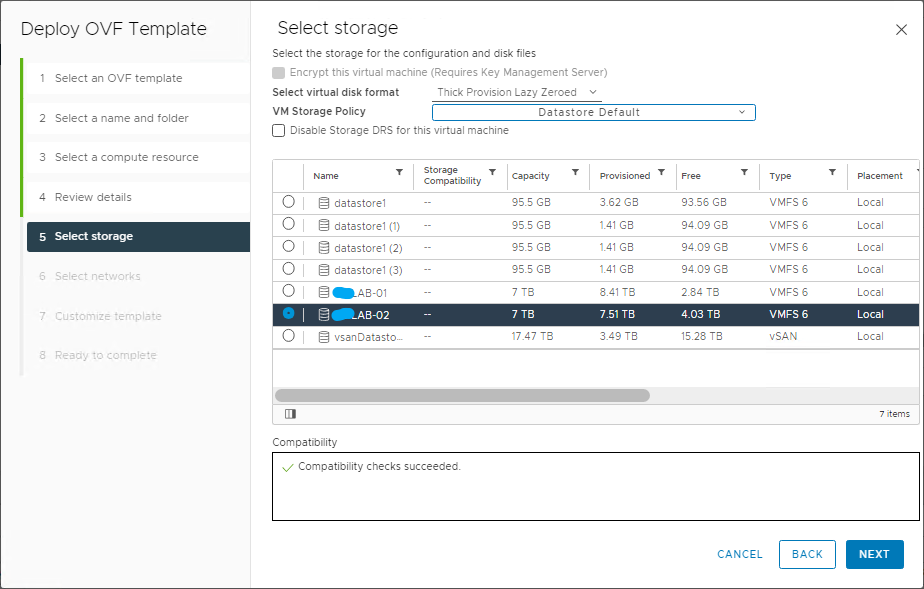

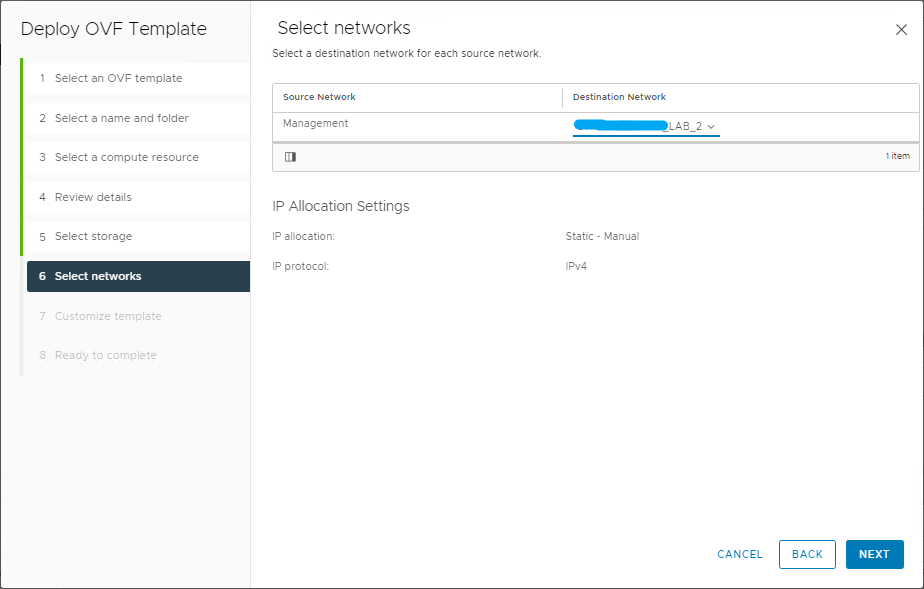

In the next steps, select the datastore and controller management network

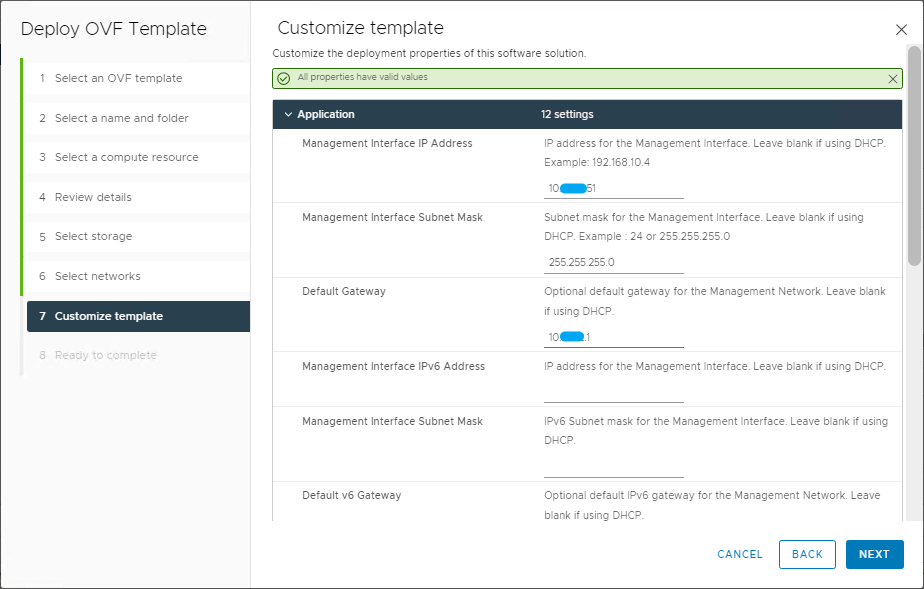

Now enter the settings for the controller management interface

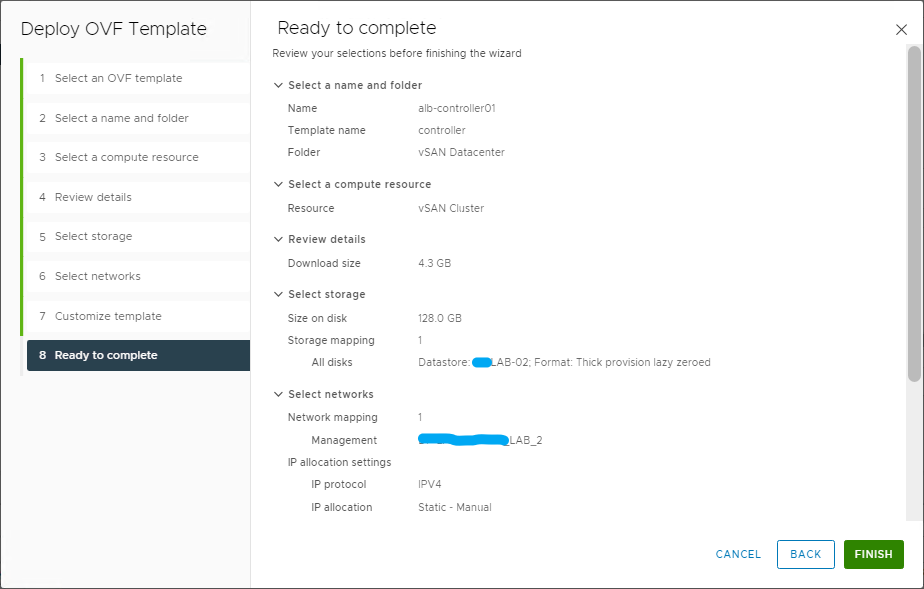

Select FINISH to start deployment

Power on the VM and wait for the services to become active (this takes a while)
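
Instead of refreshing the browser, the waiting can be scripted. The sketch below simply retries a TCP connection to the controller's HTTPS port. This is an assumption on my part about what "ready" means: an open port 443 shows that the web server is listening, not that every controller service has finished starting, so treat it as a first signal only. The function names, retry count, and delay are illustrative.

```python
import socket
import time


def port_open(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_until_ready(probe, attempts=60, delay=10.0, sleep=time.sleep):
    """Call probe() until it returns True.

    Returns the number of the successful attempt, or None if every
    attempt failed.
    """
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None
```

For example, `wait_until_ready(lambda: port_open("192.0.2.11"))` would poll a controller at that (placeholder) management address every 10 seconds for up to about 10 minutes.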

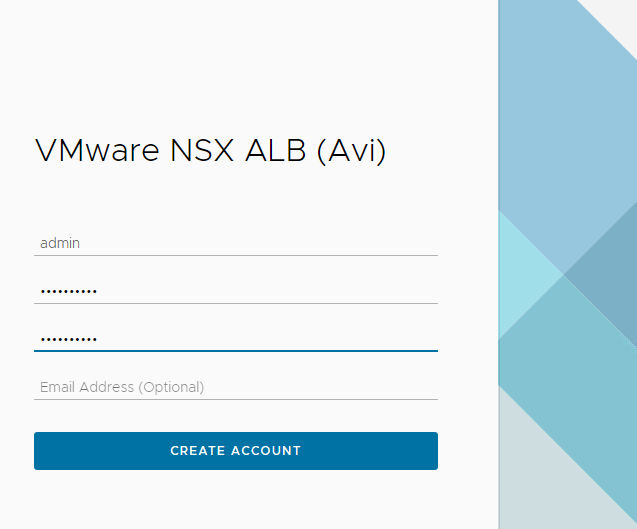

The first time you log in, you will need to set the password for the admin user

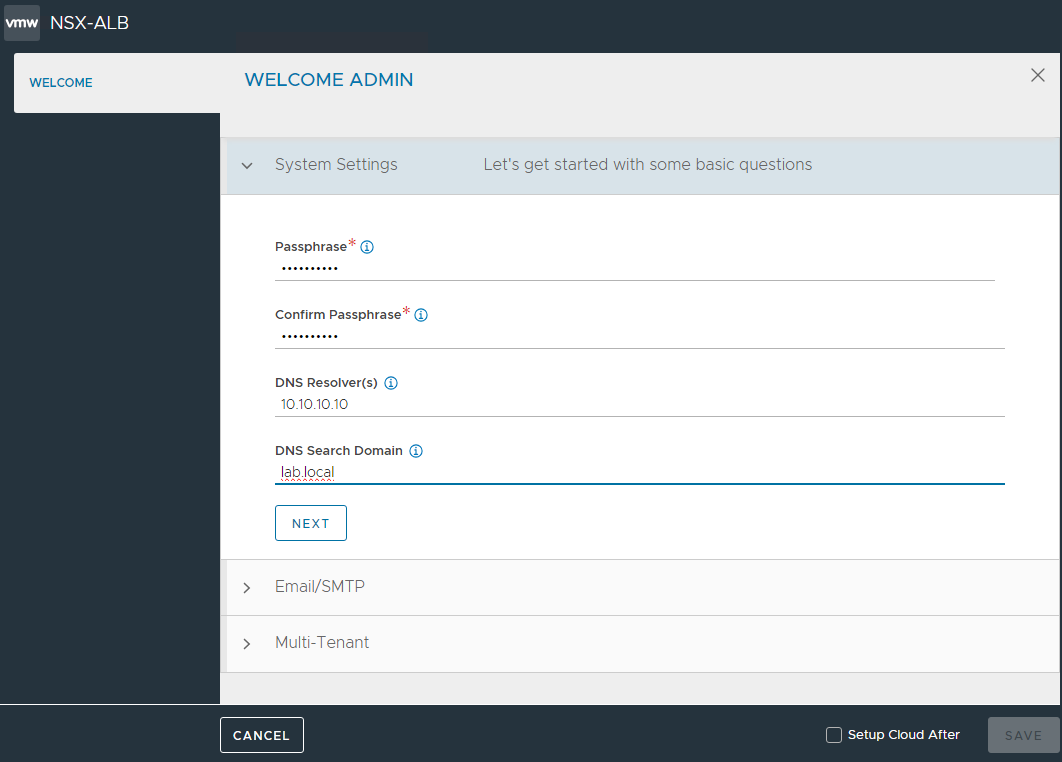

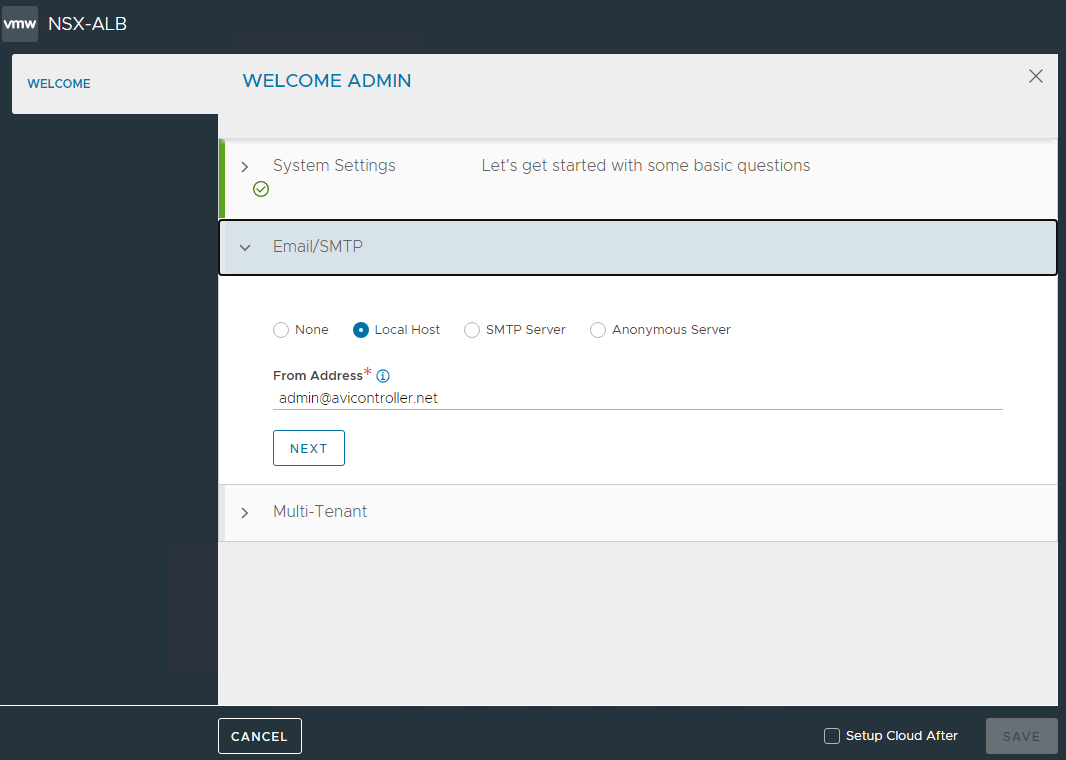

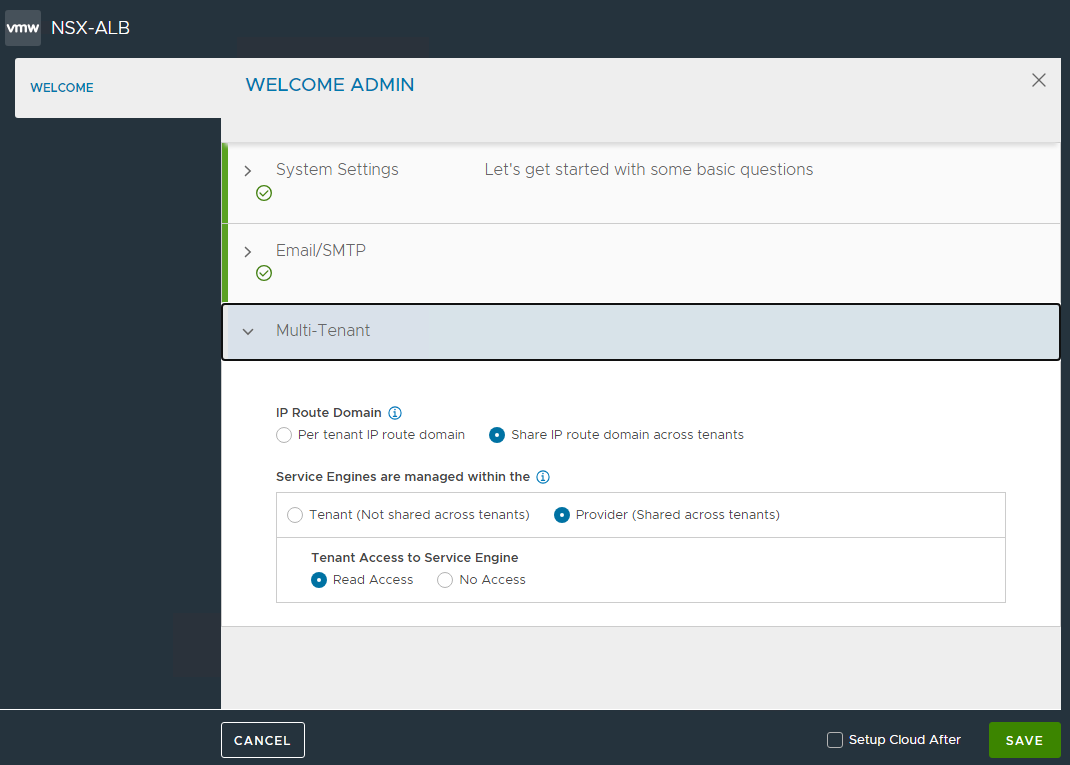

Enter the information necessary to complete the configuration and SAVE

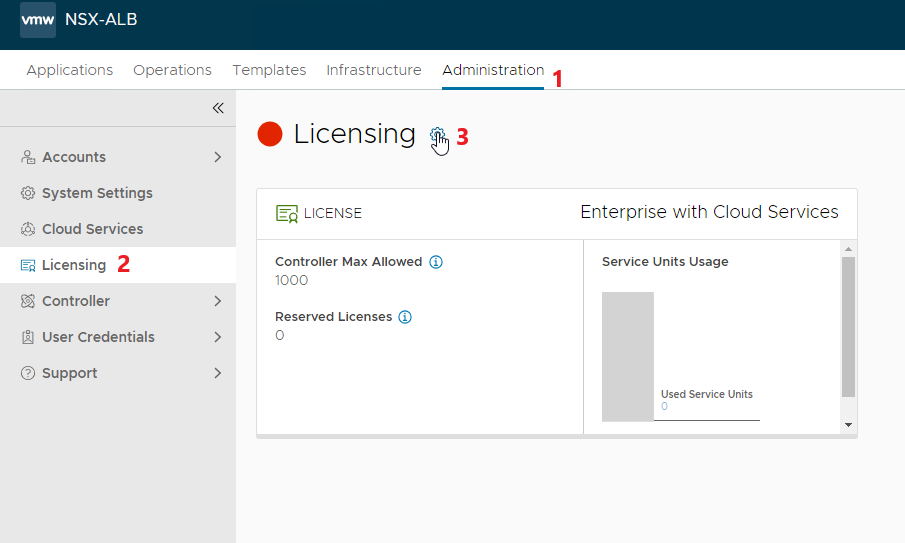

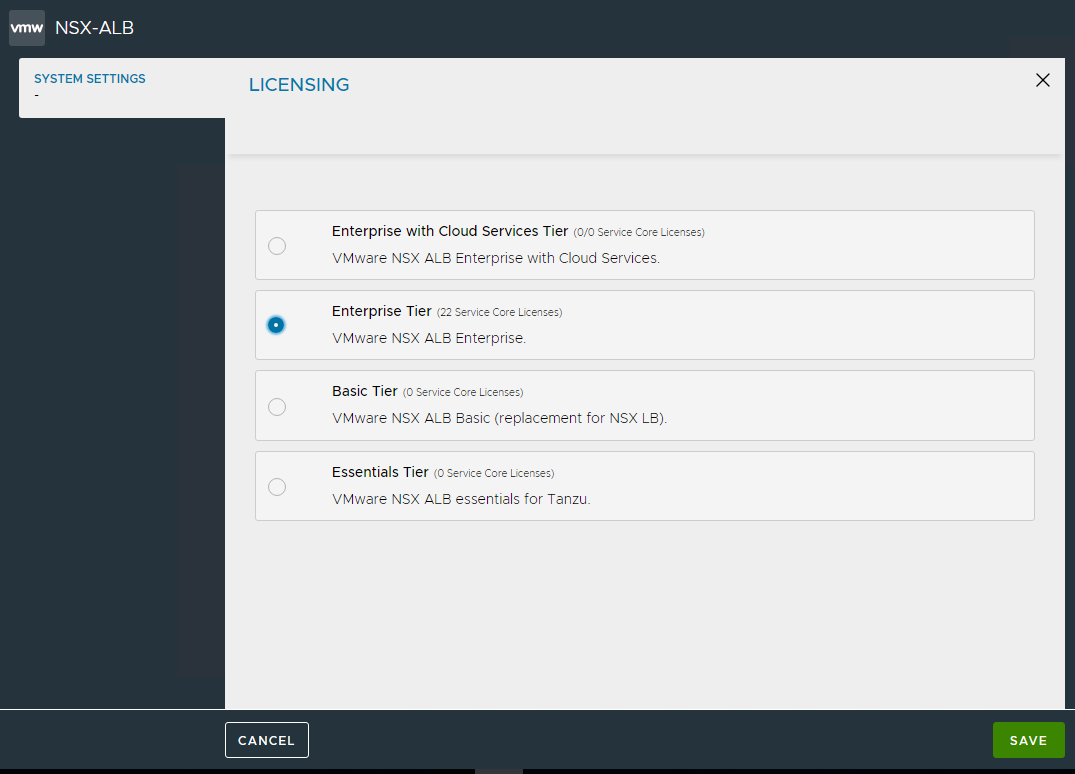

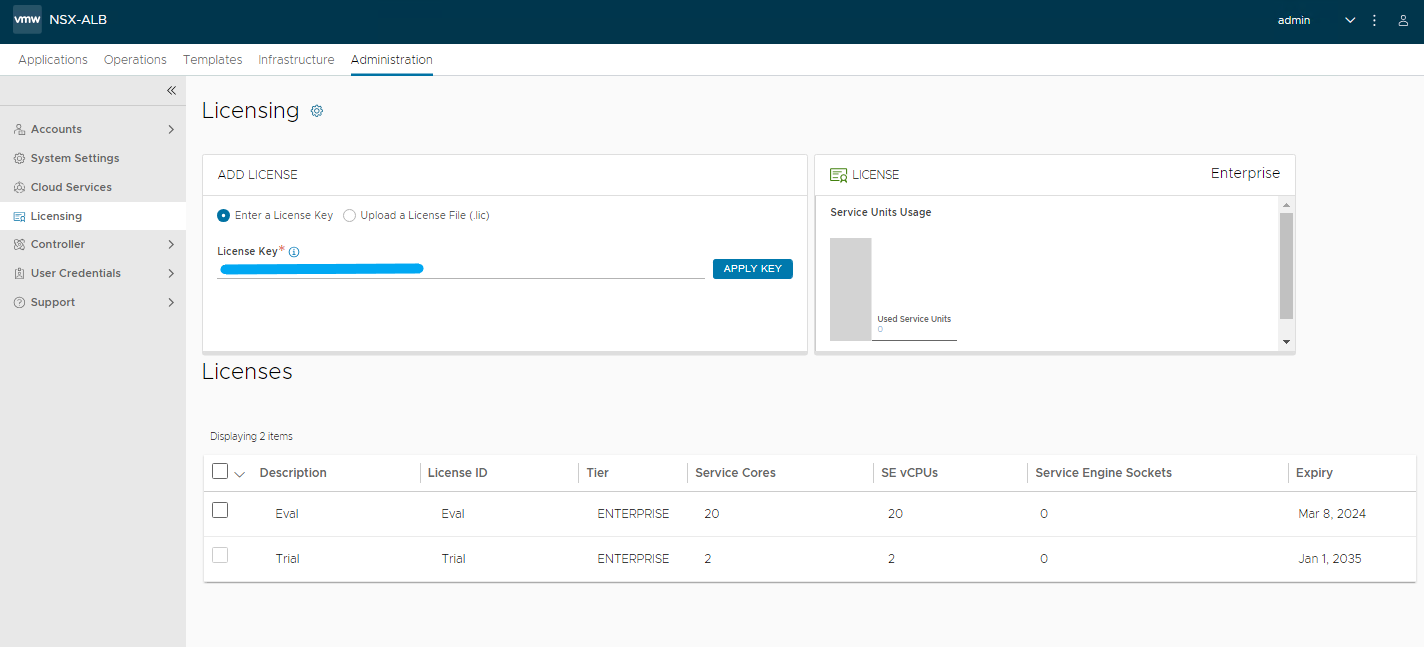

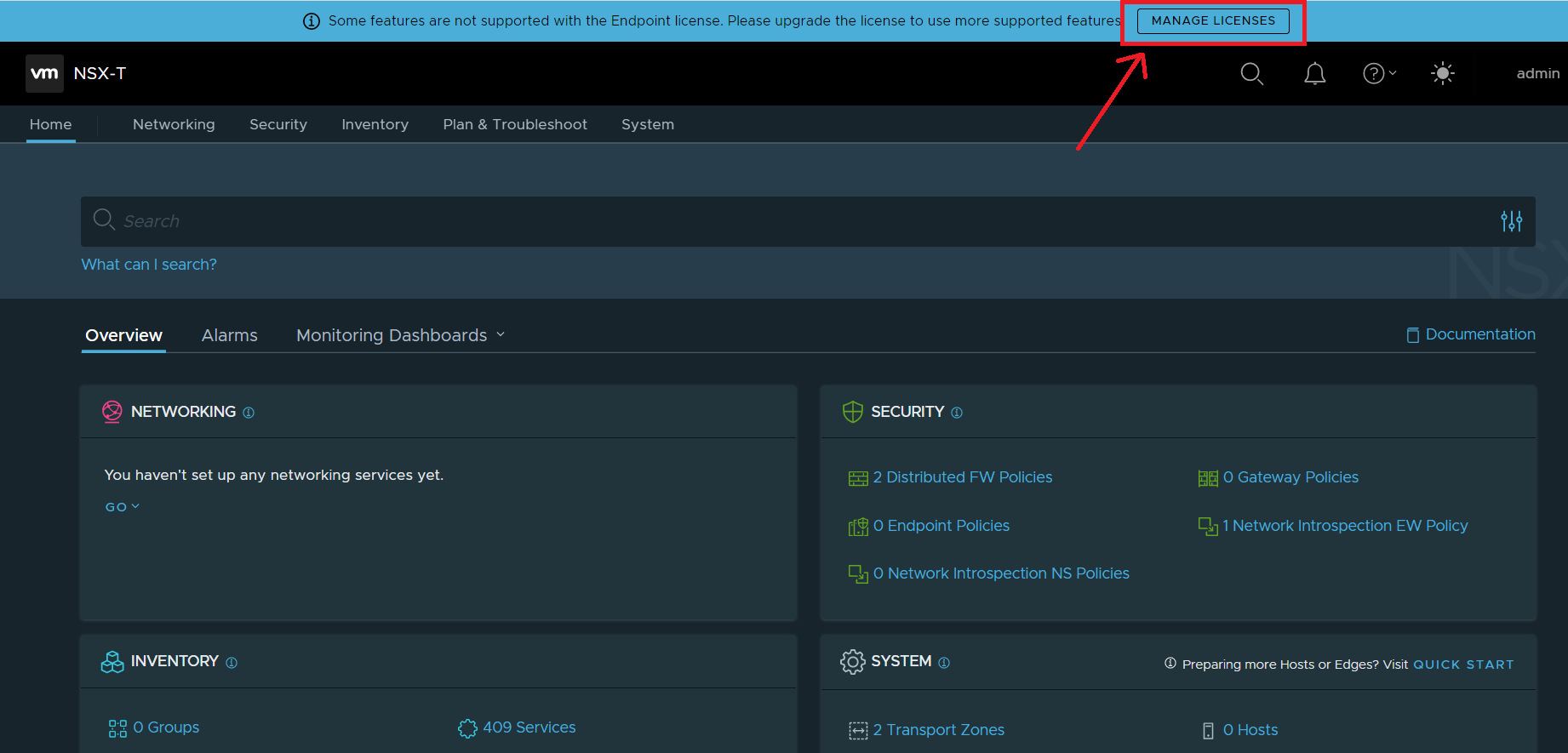

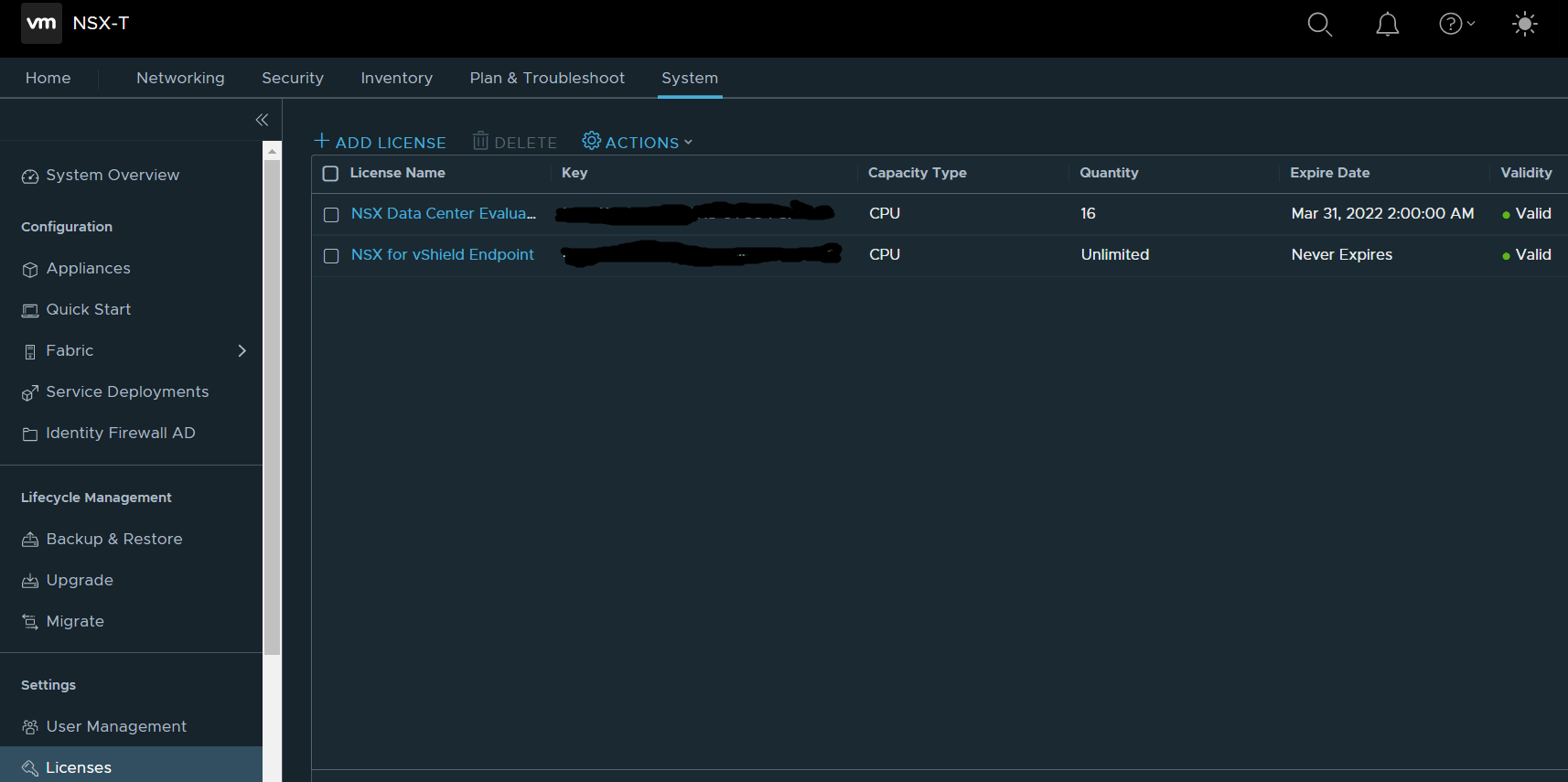

First, select the license type to use. For the lab I used the Enterprise license with a 1-month evaluation period

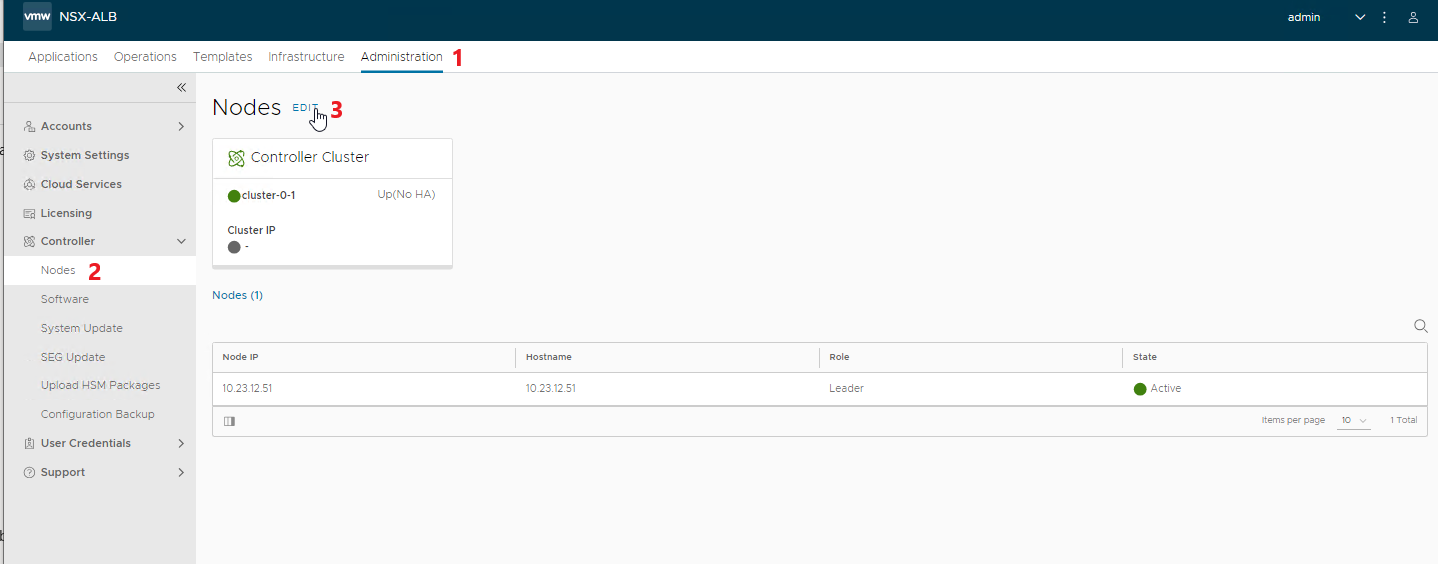

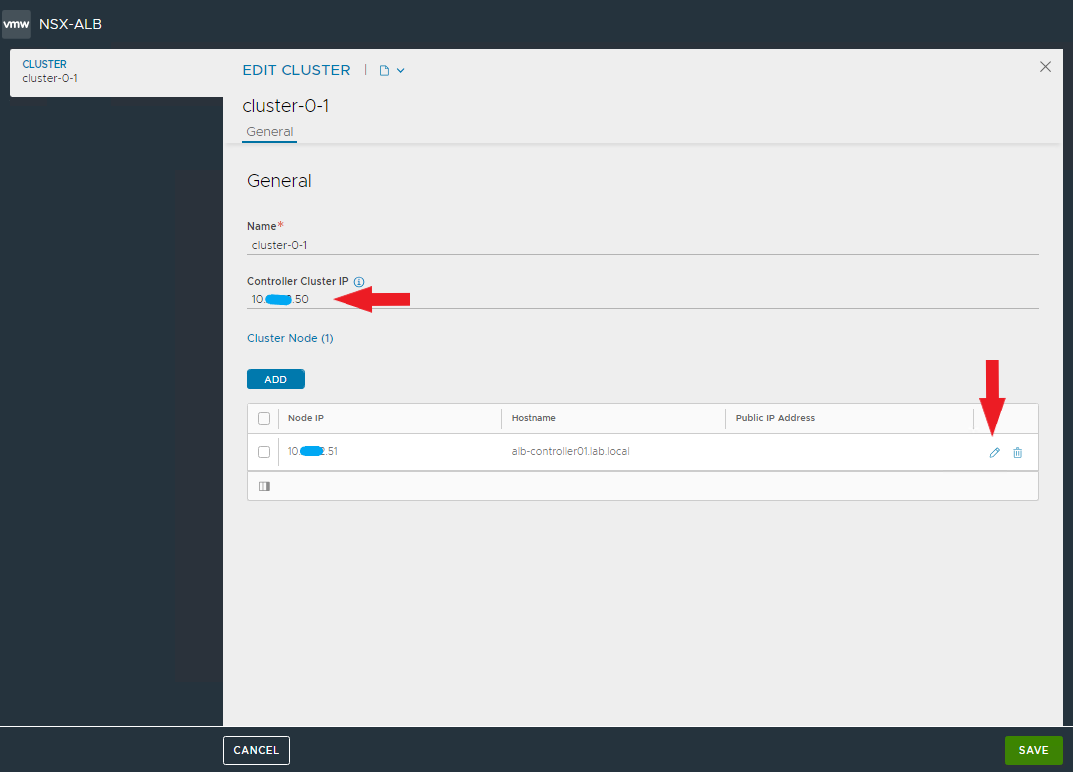

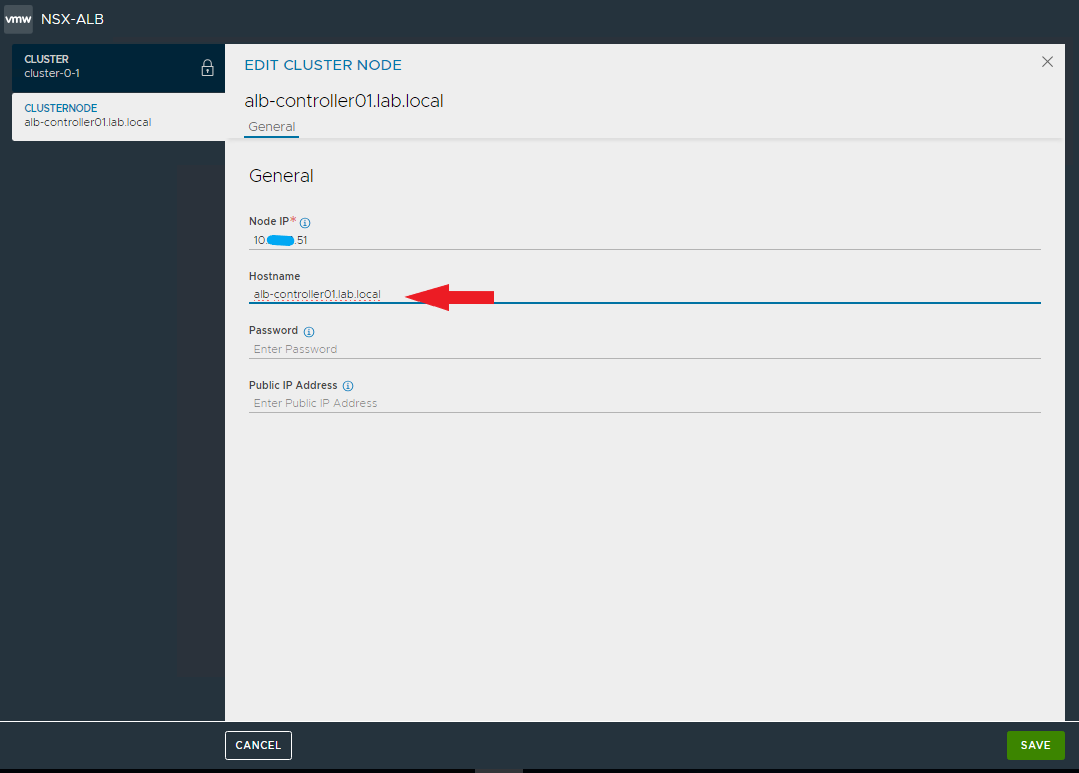

Change the cluster and node settings by entering the virtual address and FQDN, then SAVE.

NOTE: after saving the settings you will lose connectivity with the node for a few seconds

Connect to the controller using the VIP address

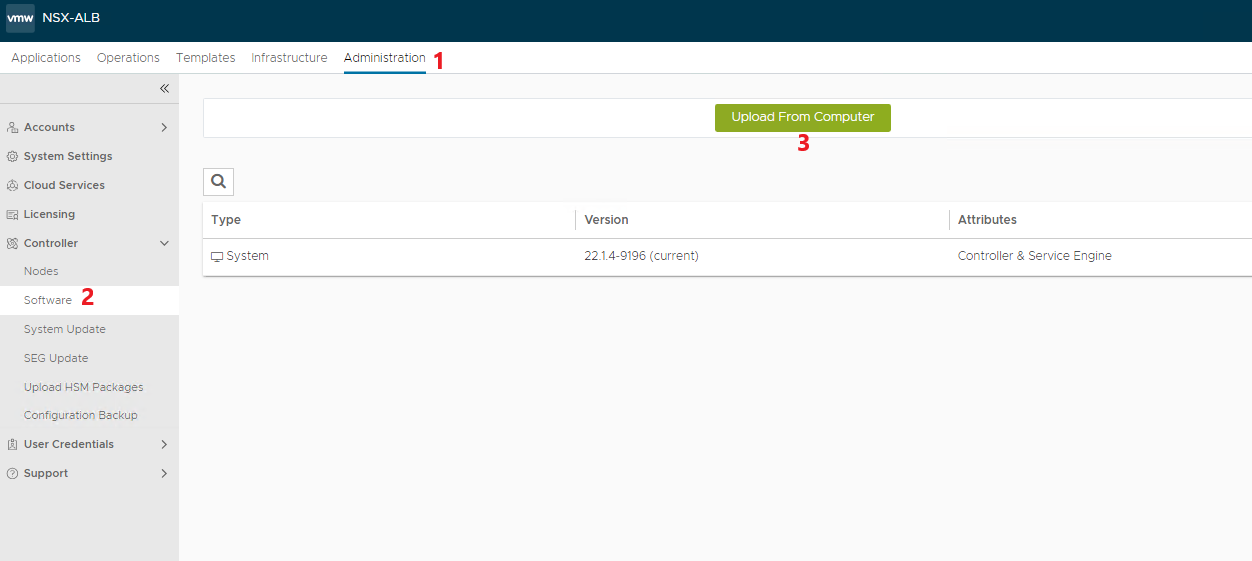

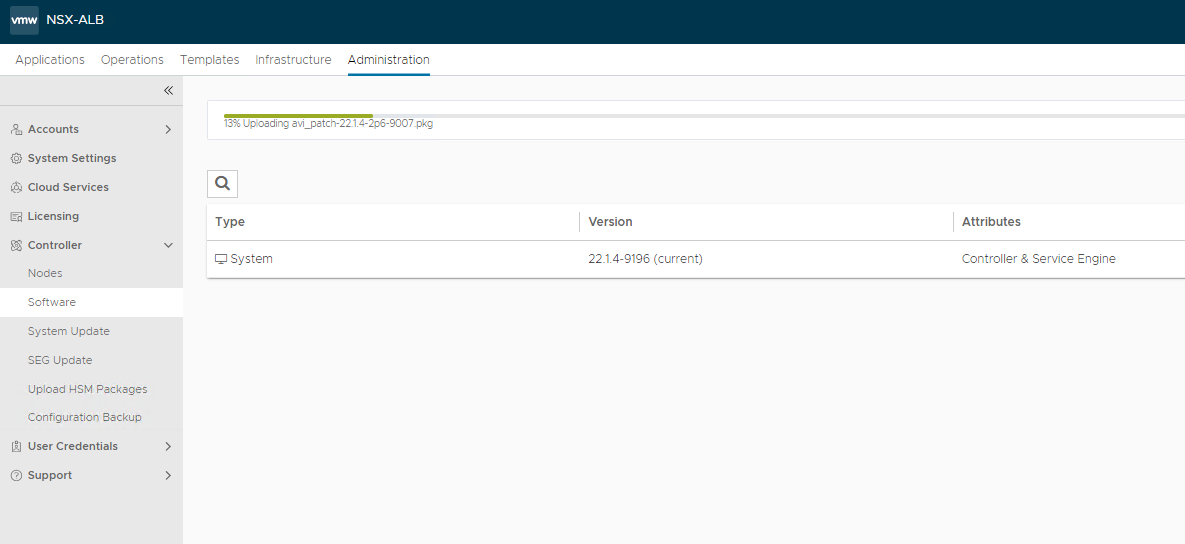

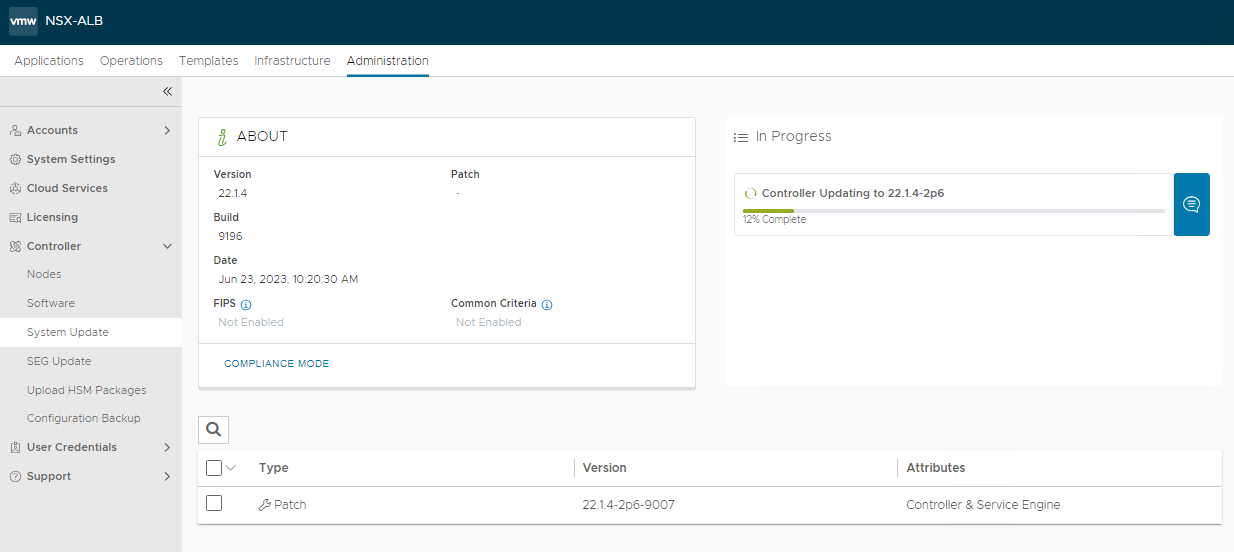

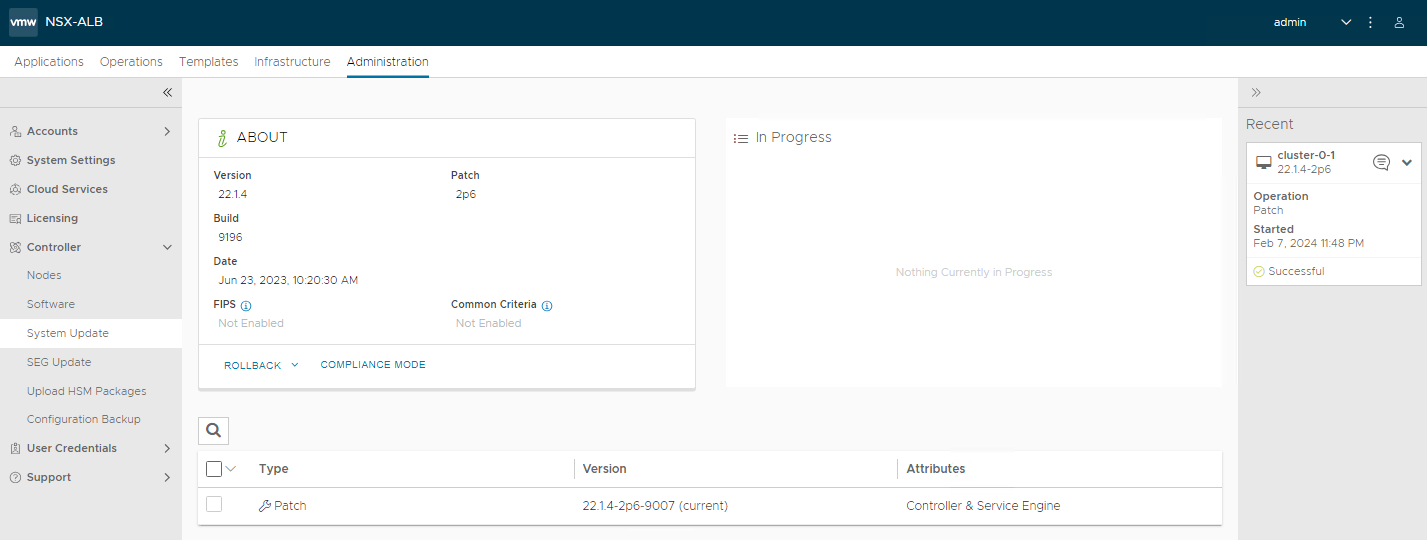

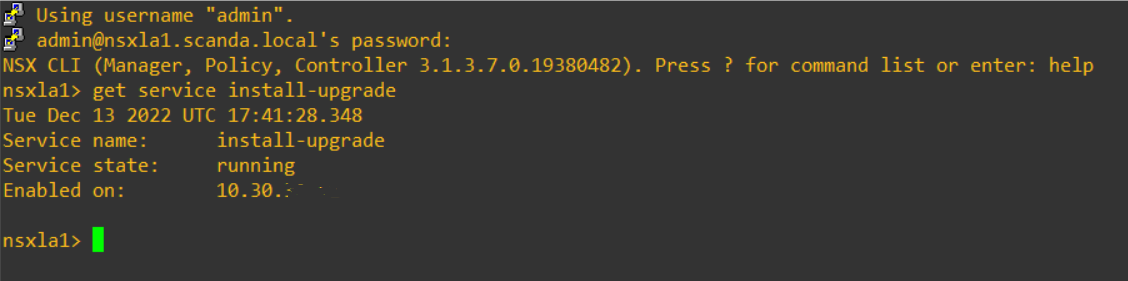

Apply the latest available patches

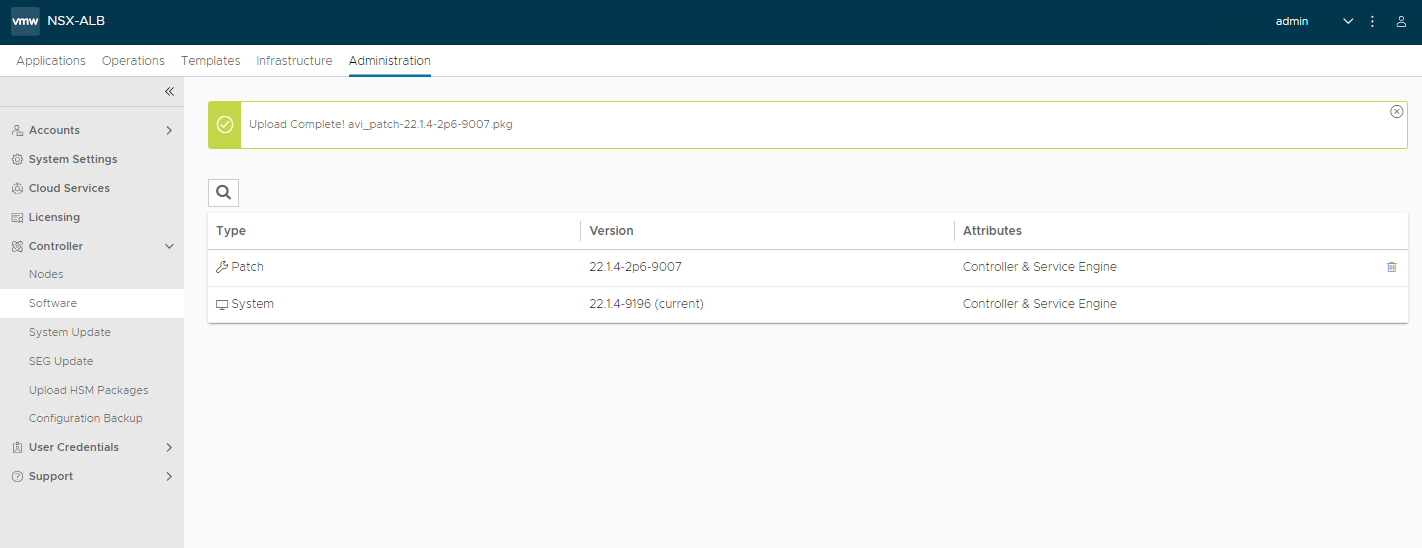

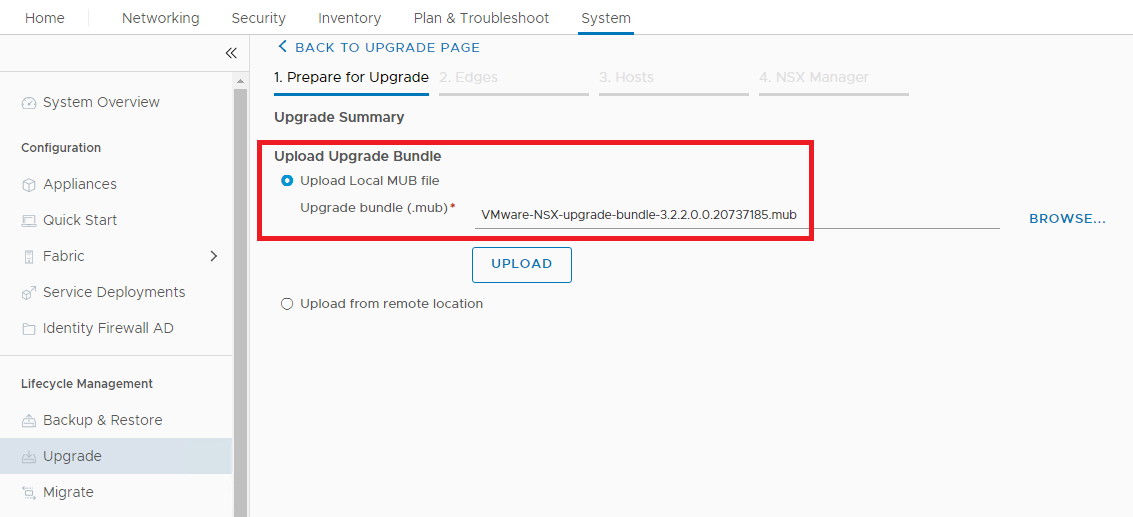

Select the previously downloaded file and upload it

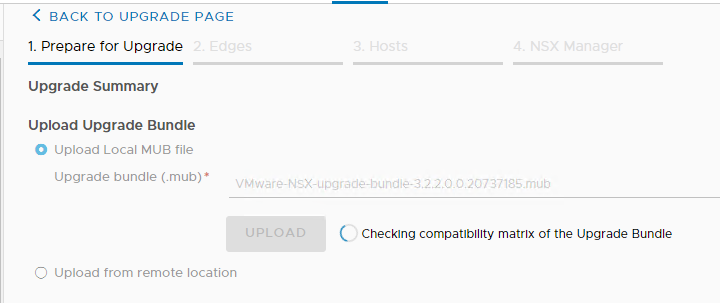

Wait for the upload to complete successfully

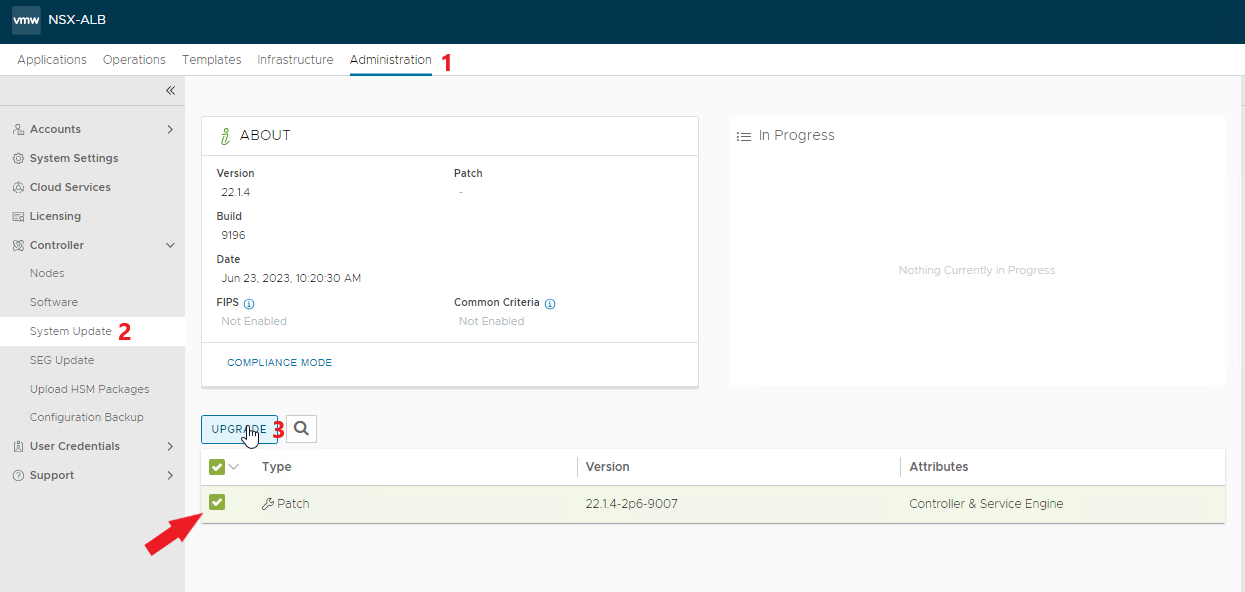

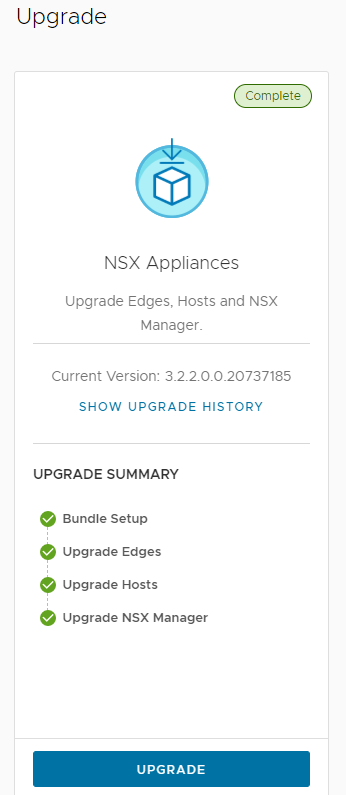

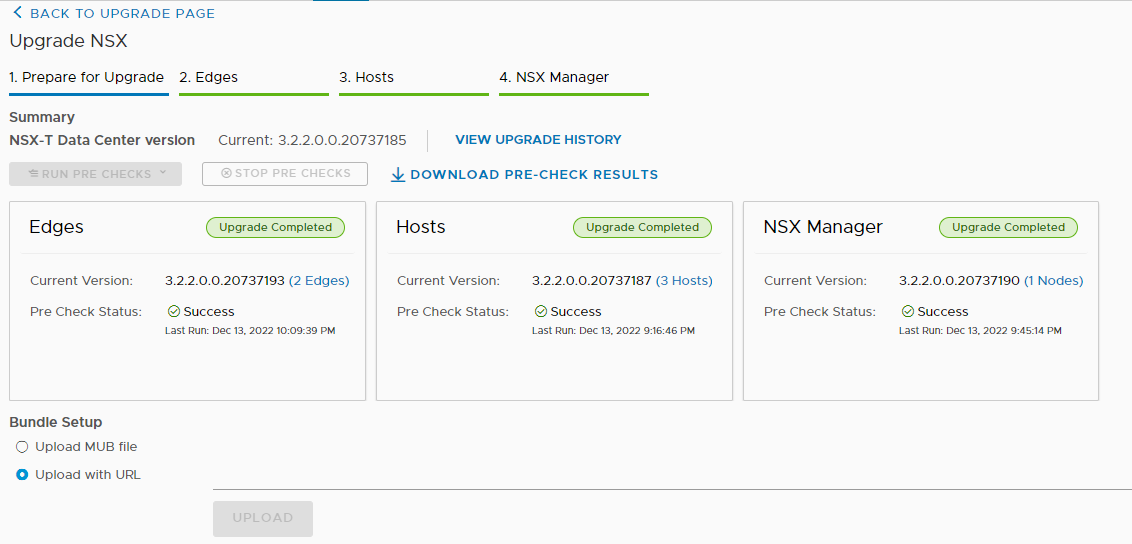

Start the upgrade

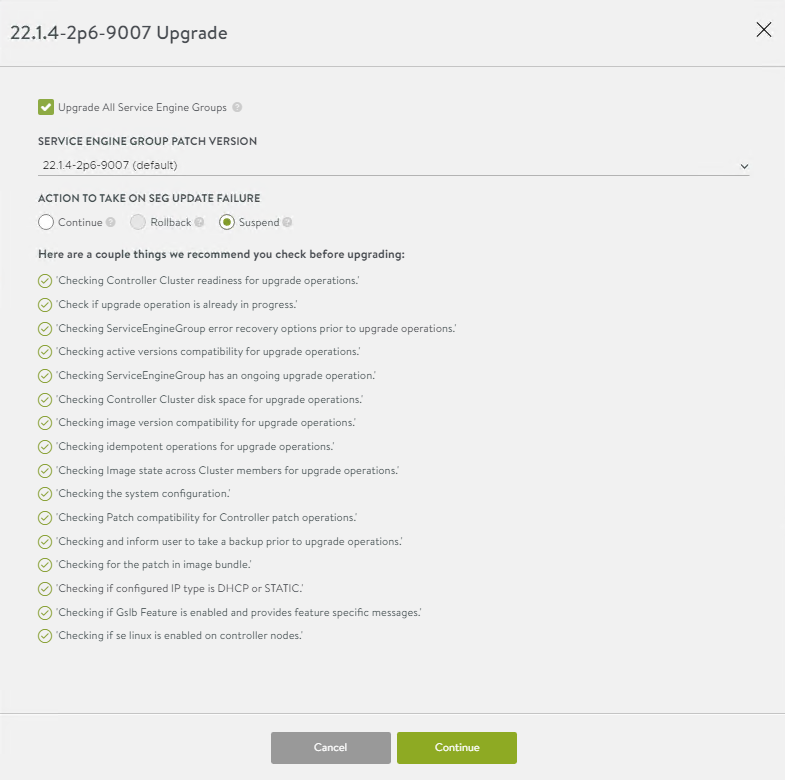

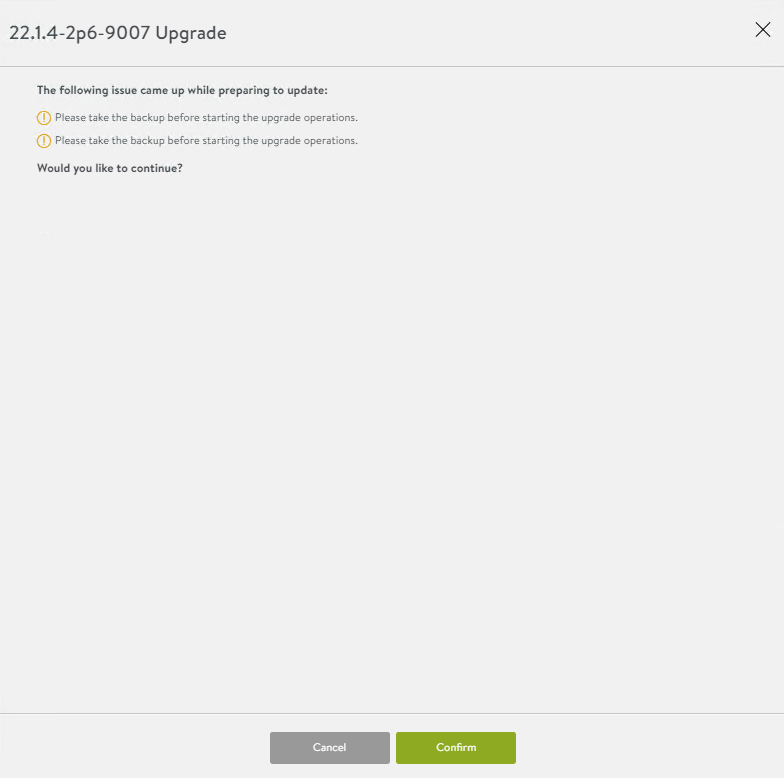

Leave the default settings and confirm

Follow the upgrade process. During the upgrade the controller will reboot

Patch applied!

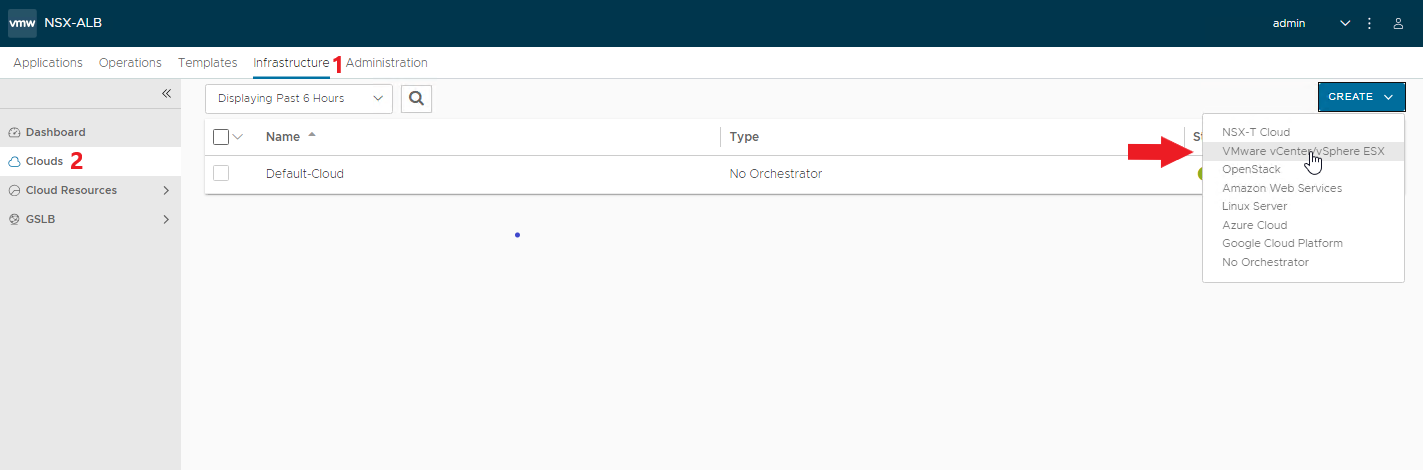

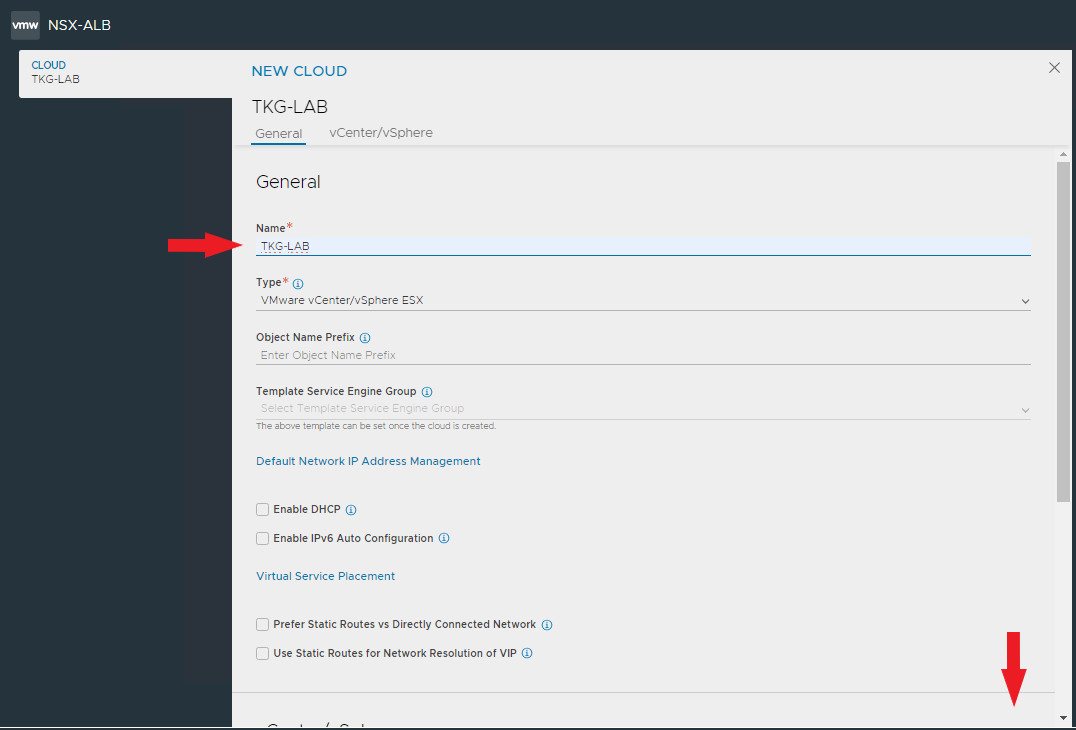

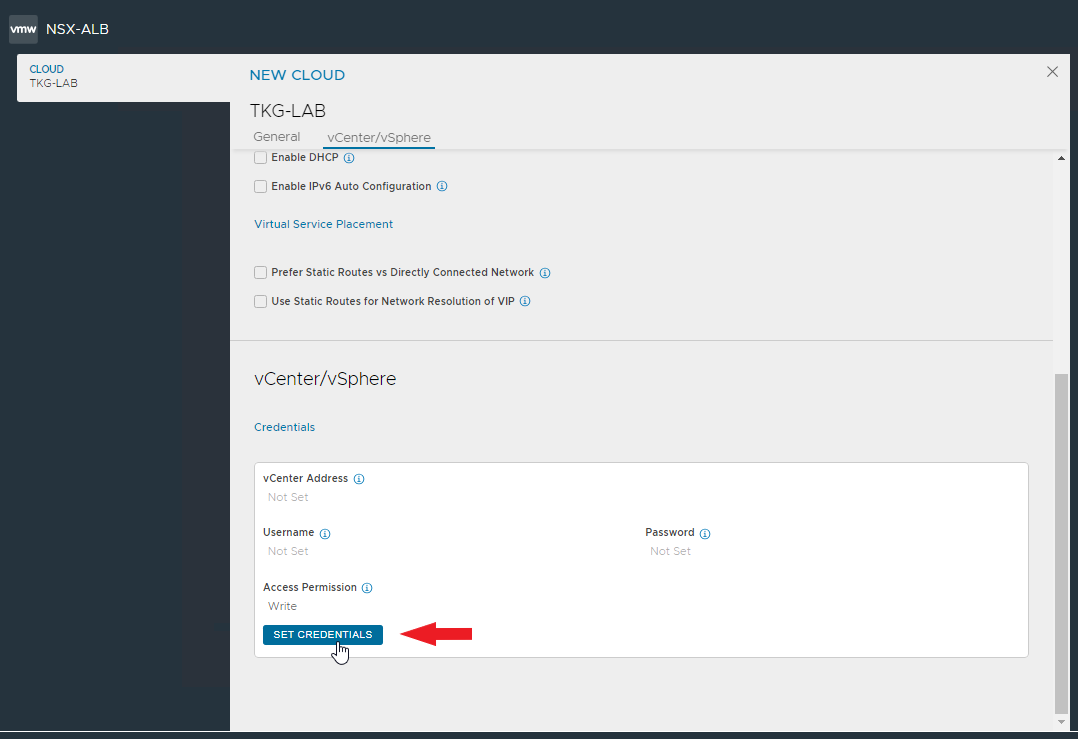

Create the Cloud zone associated with our vCenter for automatic deployment of Service Engines

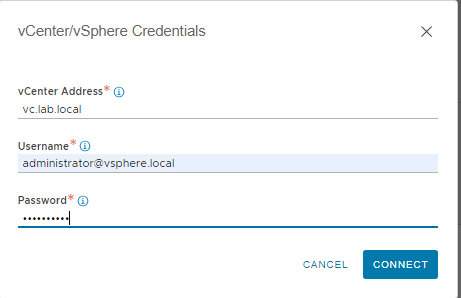

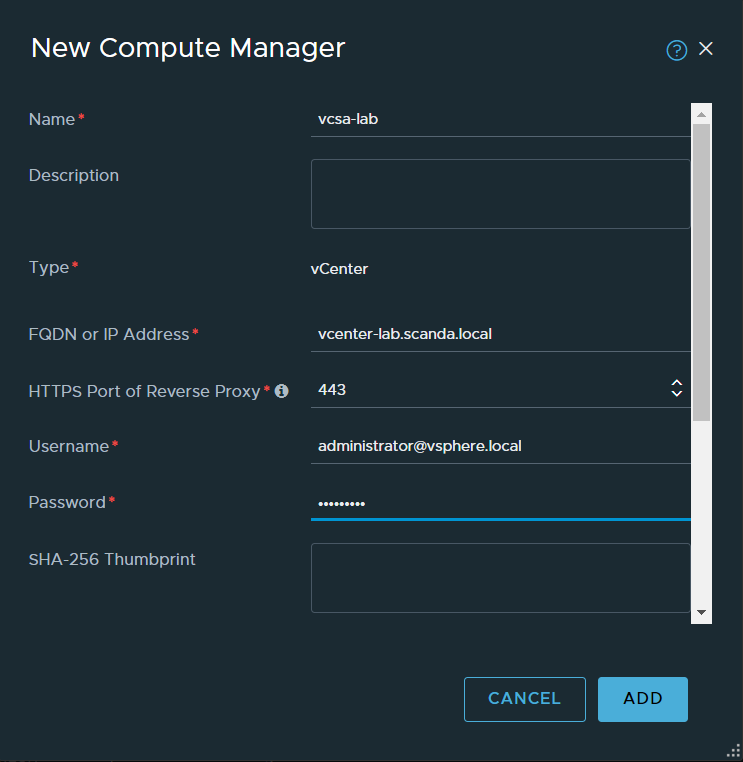

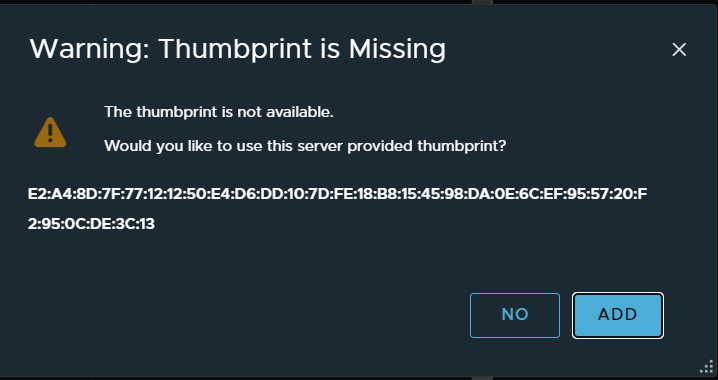

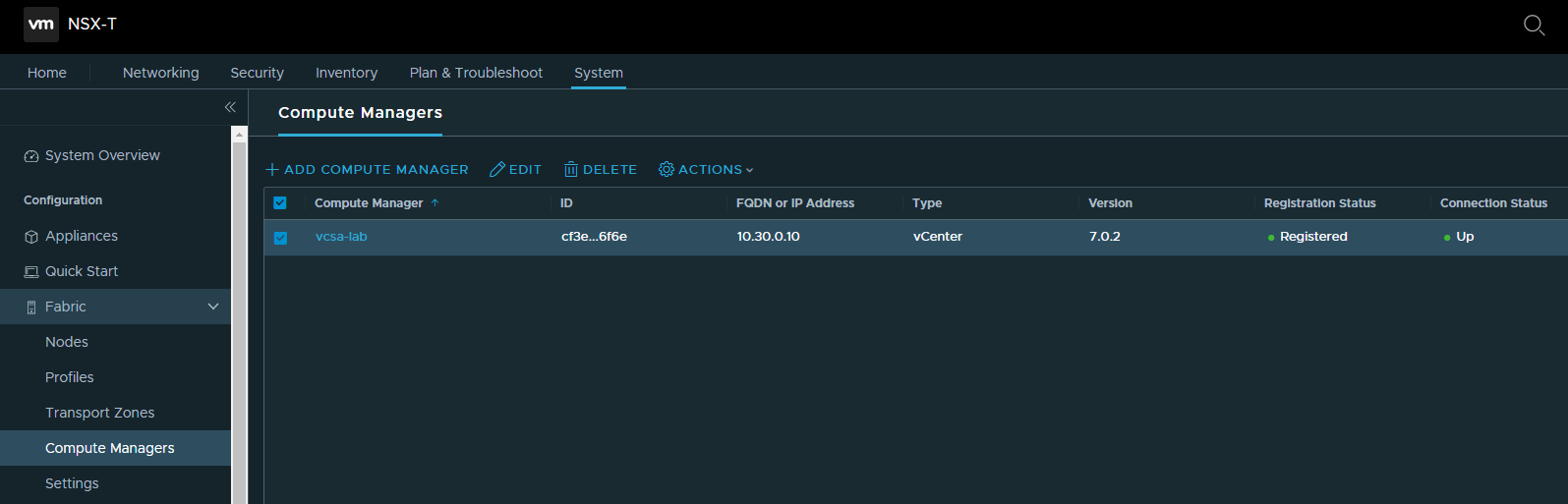

Enter the Cloud name and the vcenter connection data

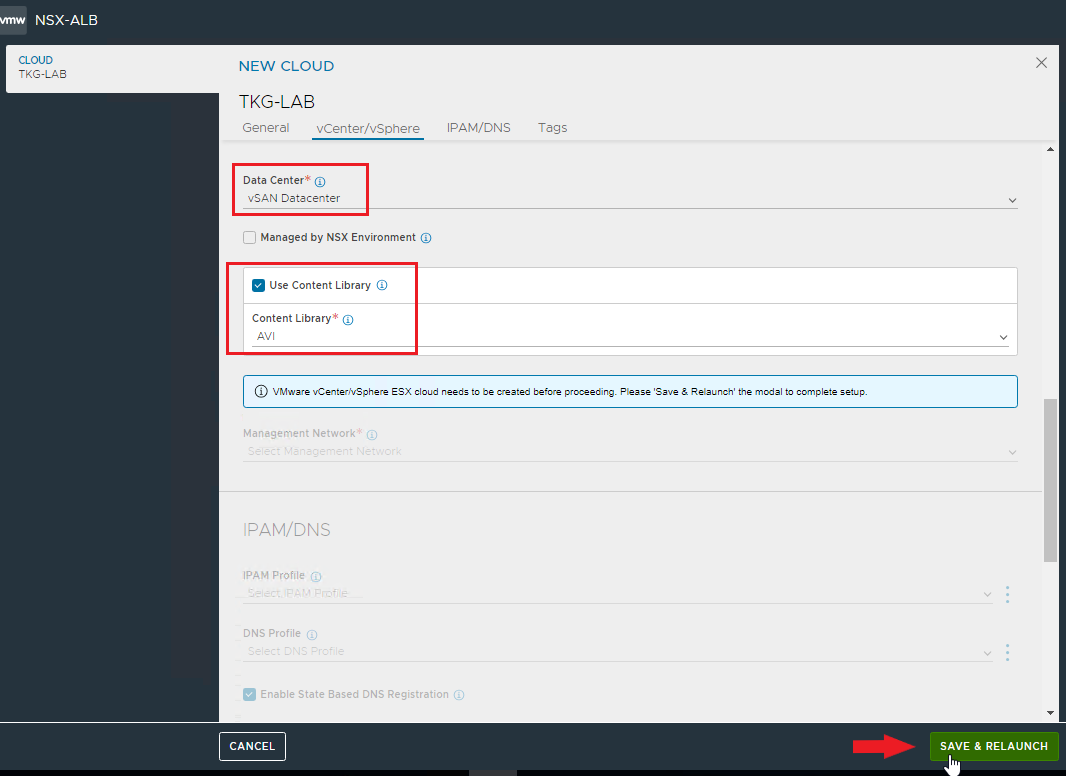

Select the Datacenter, the Content Library if any. SAVE & RELAUNCH

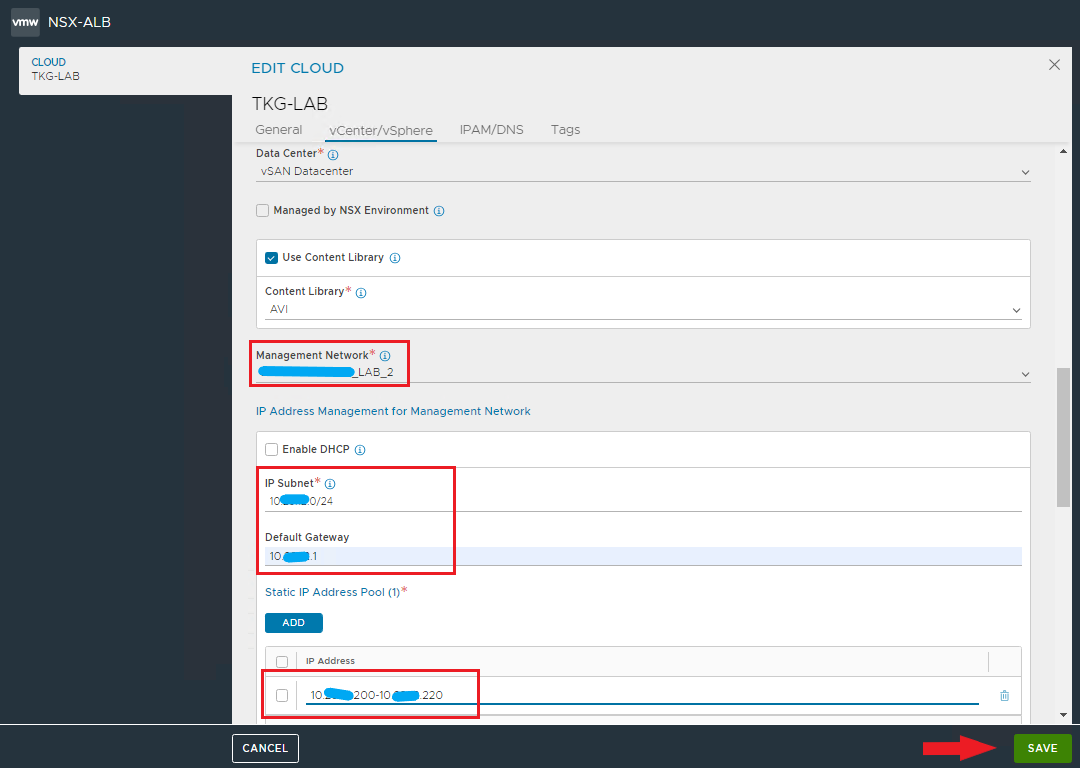

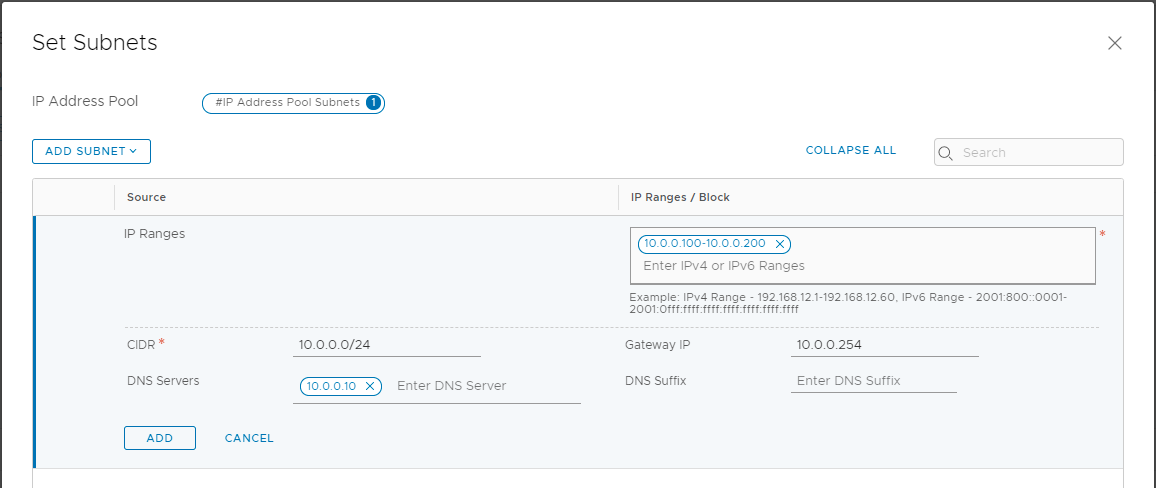

Select the management network, subnet, and default GW, and specify the pool used to assign static IP addresses to the Service Engines.
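
A static pool that overlaps the gateway, the controllers, or the cluster VIP is an easy mistake to make here. The sketch below uses Python's standard `ipaddress` module to sanity-check a planned range before typing it into the wizard. All addresses are documentation-range placeholders (192.0.2.0/24), and the function name and the rules it checks are my own assumptions about what a sensible pool looks like, not ALB requirements.

```python
import ipaddress


def check_static_pool(subnet, gateway, pool_start, pool_end, reserved=()):
    """Return a list of problems with a planned Service Engine IP pool."""
    network = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    problems = []

    if gw not in network:
        problems.append(f"gateway {gw} is outside {network}")
    if start > end:
        problems.append("pool start is after pool end")
    for label, addr in (("start", start), ("end", end)):
        if addr not in network:
            problems.append(f"pool {label} {addr} is outside {network}")

    # The gateway and other fixed addresses must not fall inside the pool.
    for addr_text in (gateway, *reserved):
        addr = ipaddress.ip_address(addr_text)
        if start <= addr <= end:
            problems.append(f"{addr} falls inside the pool")

    return problems


# Placeholder values: controllers and VIP at .10-.13, pool at .50-.69.
print(check_static_pool(
    "192.0.2.0/24", "192.0.2.1", "192.0.2.50", "192.0.2.69",
    reserved=["192.0.2.10", "192.0.2.11", "192.0.2.12", "192.0.2.13"],
))
```

An empty list means the planned range passed all of these checks.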

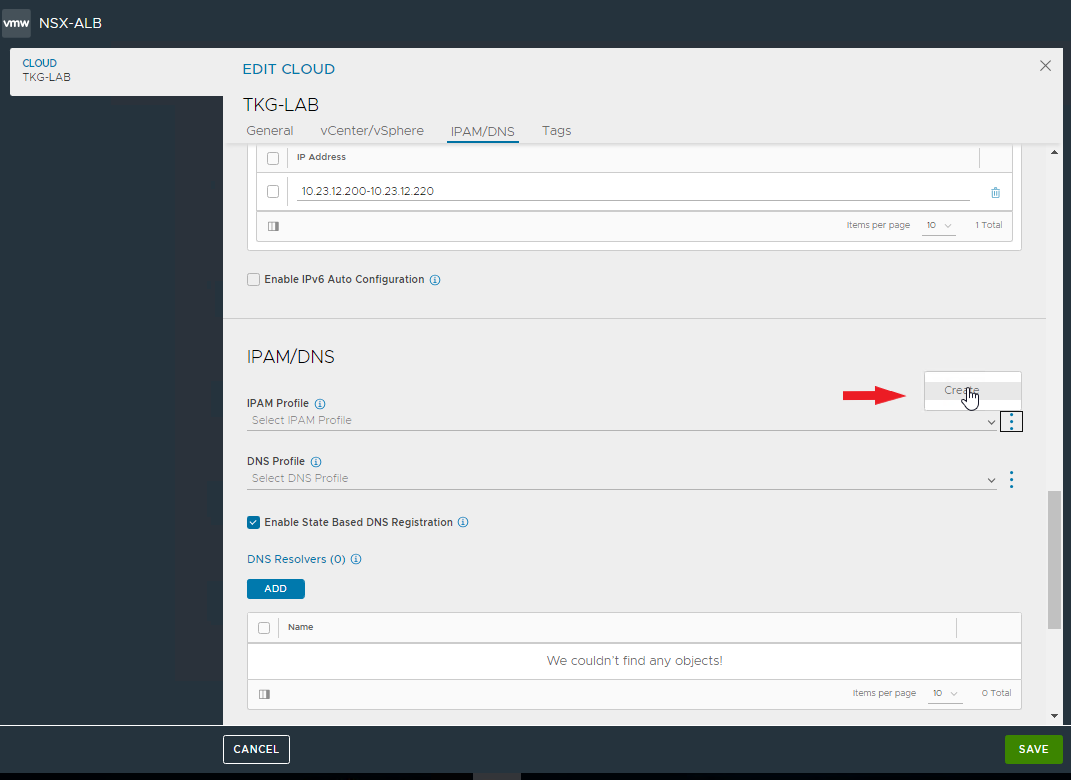

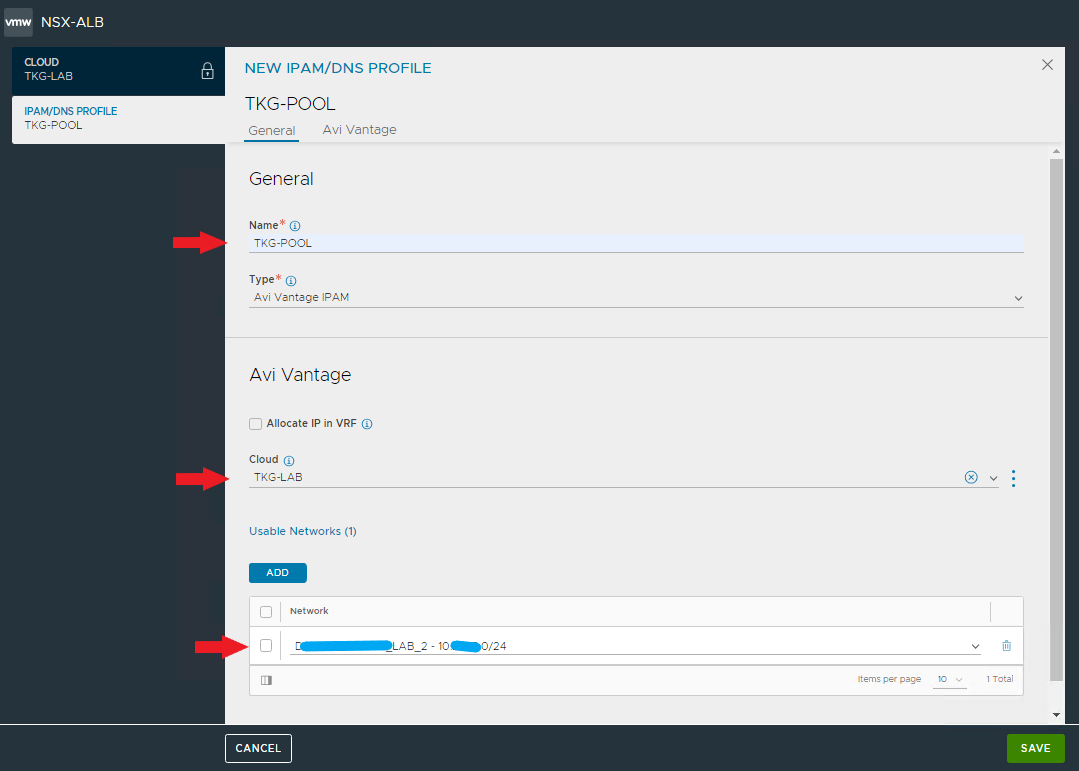

Create the IPAM profile that will be used for the VIPs required by the Tanzu clusters.

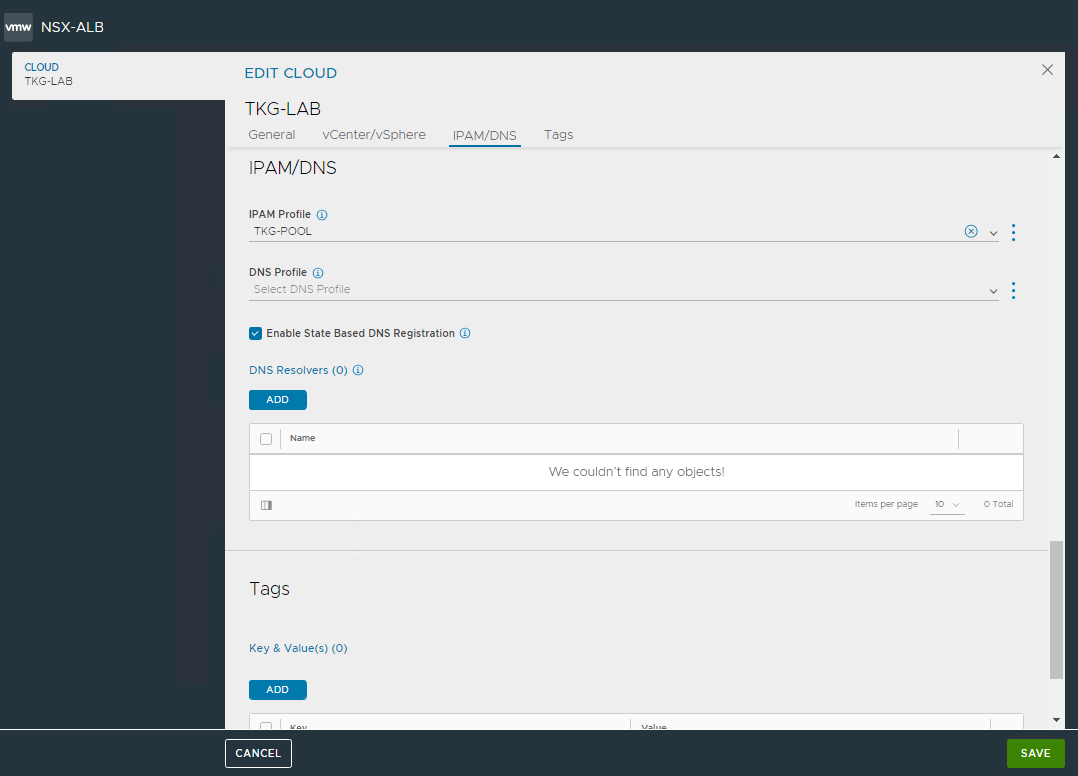

Save and return to the creation of Cloud zone. Confirm creation as well.

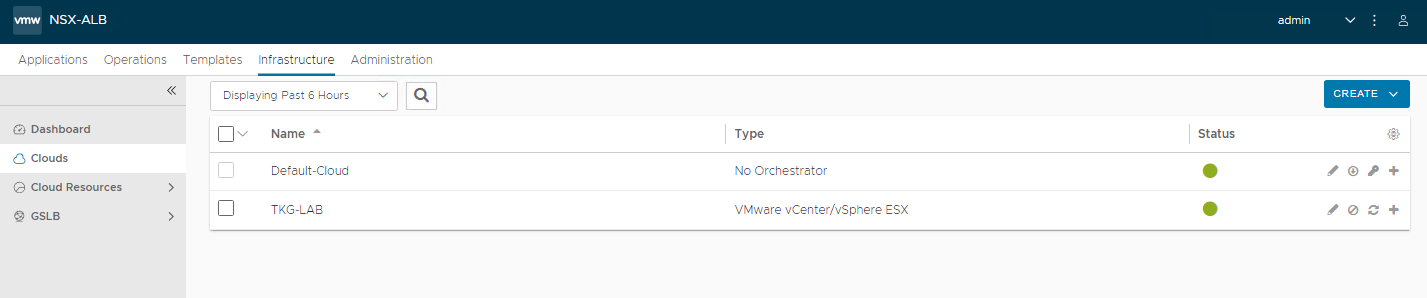

Cloud zone appears in the list, verify that it is online (green dot)

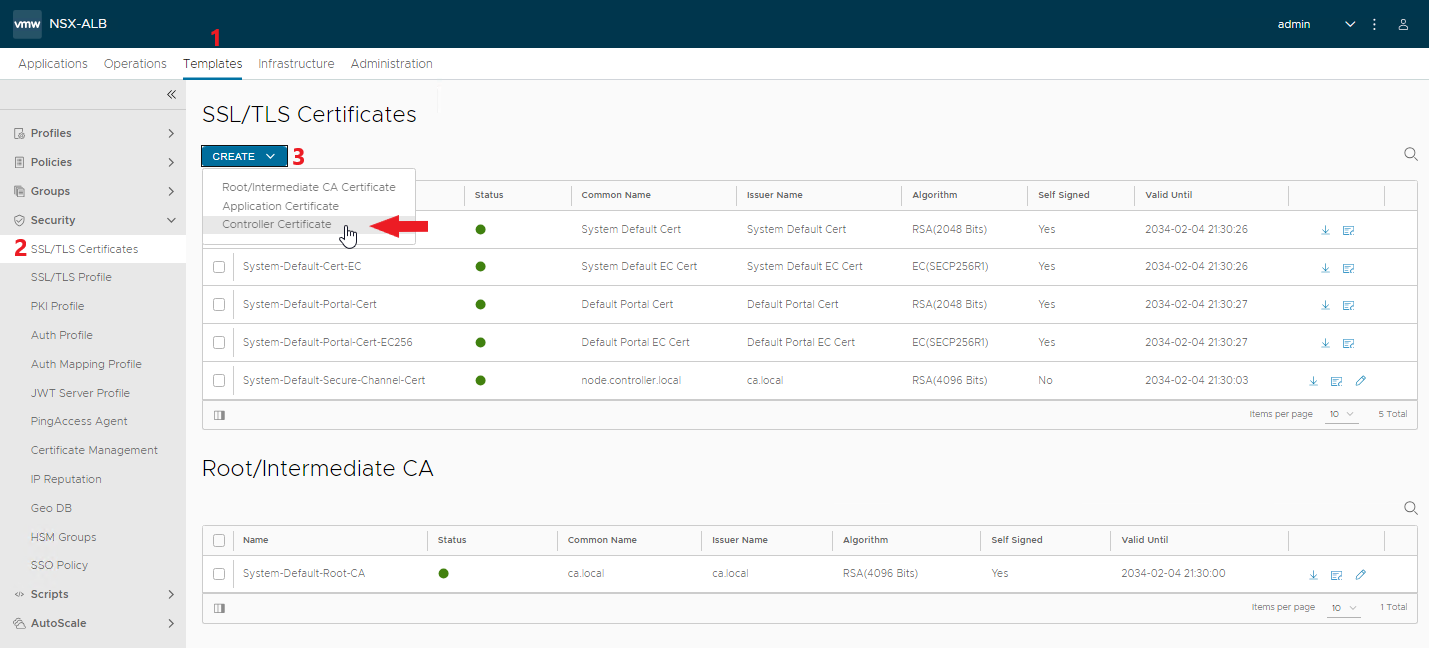

Generate an SSL certificate for the controller. This is the one used in the Tanzu standalone management cluster creation wizard

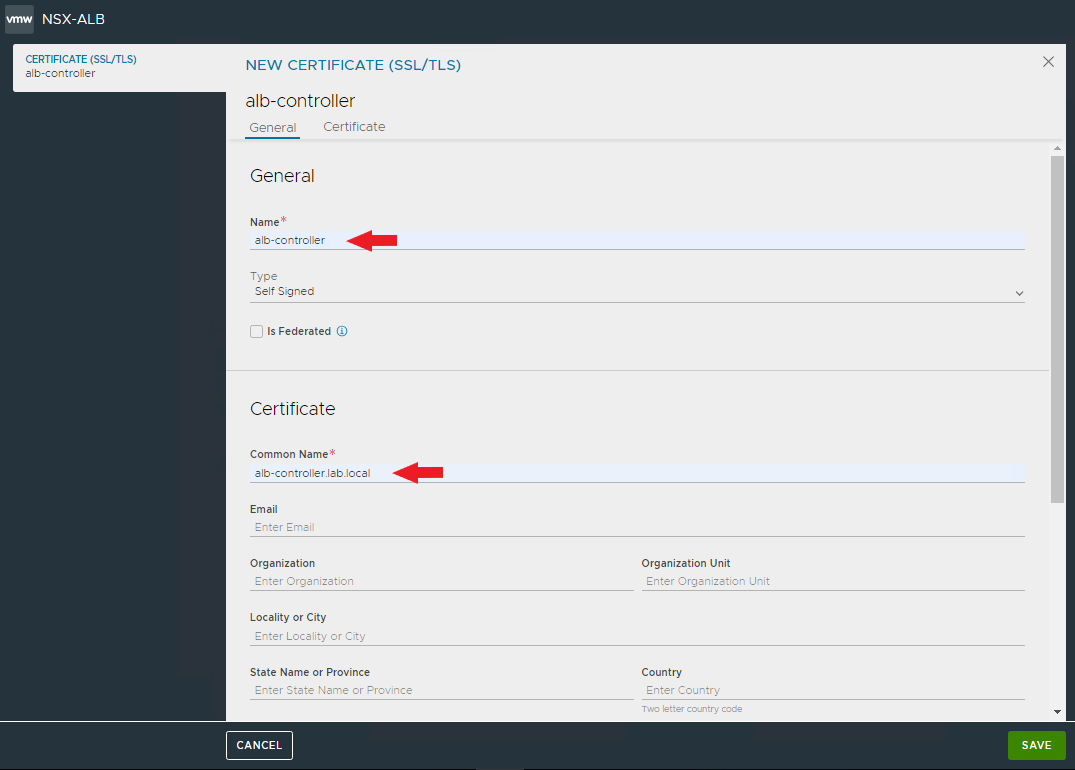

Enter the name and Common Name of the certificate, making sure that the Common Name matches the actual DNS record (FQDN) used by Tanzu to access the AVI controller. Fill in all the required fields.

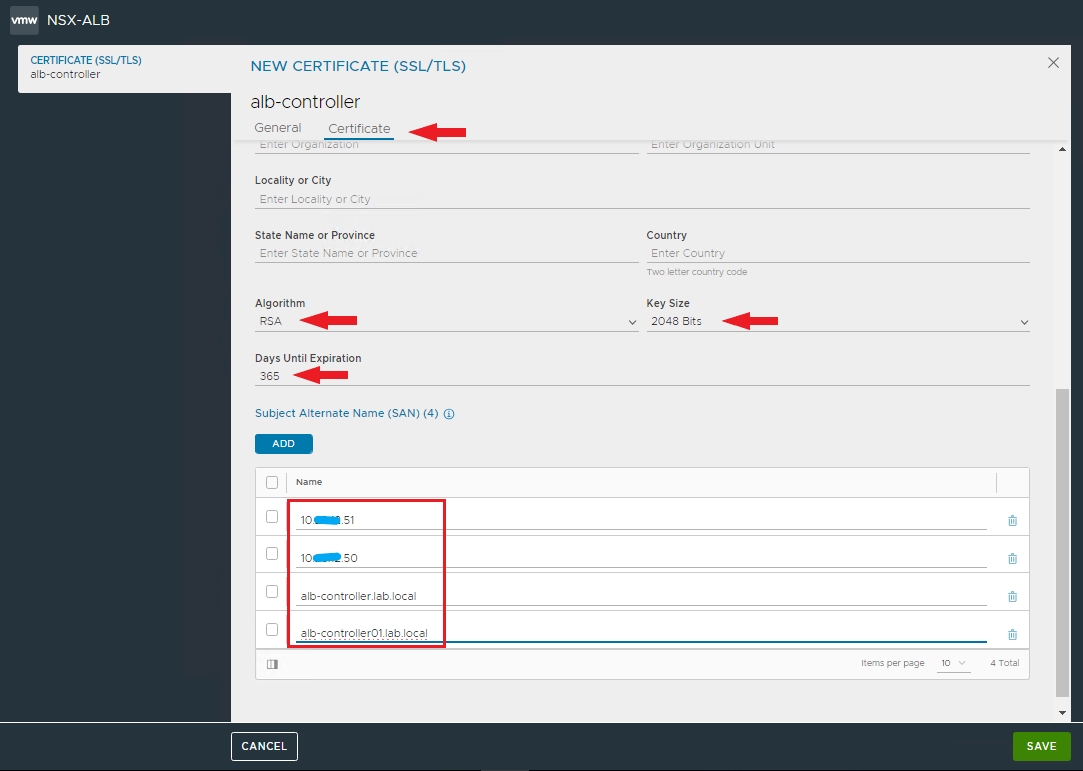

Complete the certificate with the days of validity (365, or more if you want) and add all the Subject Alternative Names under which the certificate can be reached (the IP addresses of the VIP and of the controllers, plus the FQDNs of the individual controllers)
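
It is easy to forget one entry in the SAN list, so I find it useful to write the list down before filling in the form. The sketch below sorts a mixed list of names and addresses into `DNS:` and `IP:` entries (the prefix notation OpenSSL uses for `subjectAltName`) and drops duplicates. The host names and addresses are placeholders, and the helper is only a checklist aid; the ALB form itself simply takes the values one by one.

```python
import ipaddress


def build_san_entries(names_and_addresses):
    """Classify each value as an IP or DNS SAN, dropping duplicates in order."""
    entries = []
    for value in names_and_addresses:
        value = value.strip()
        try:
            ipaddress.ip_address(value)
            entry = f"IP:{value}"
        except ValueError:
            # Not an IP address, so treat it as a DNS name (case-insensitive).
            entry = f"DNS:{value.lower()}"
        if entry not in entries:
            entries.append(entry)
    return entries


# Placeholder values: cluster VIP first, then each controller node.
for entry in build_san_entries([
    "avi.lab.example", "192.0.2.10",
    "avi-ctrl01.lab.example", "192.0.2.11",
]):
    print(entry)
```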

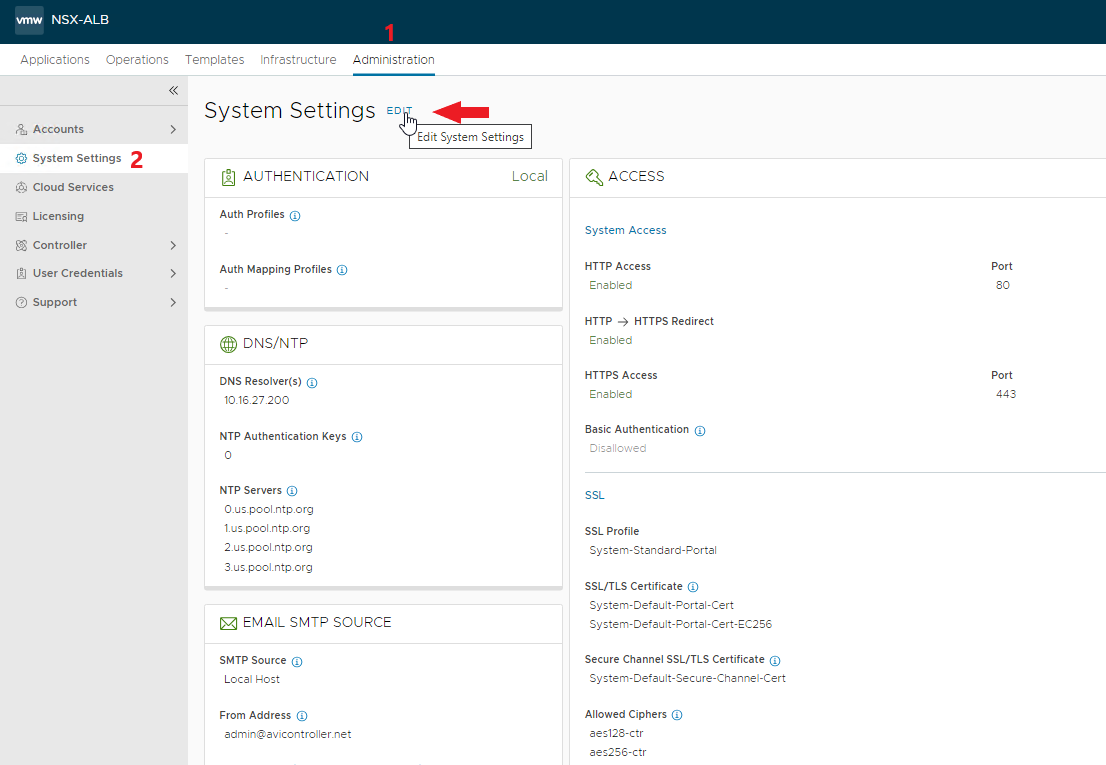

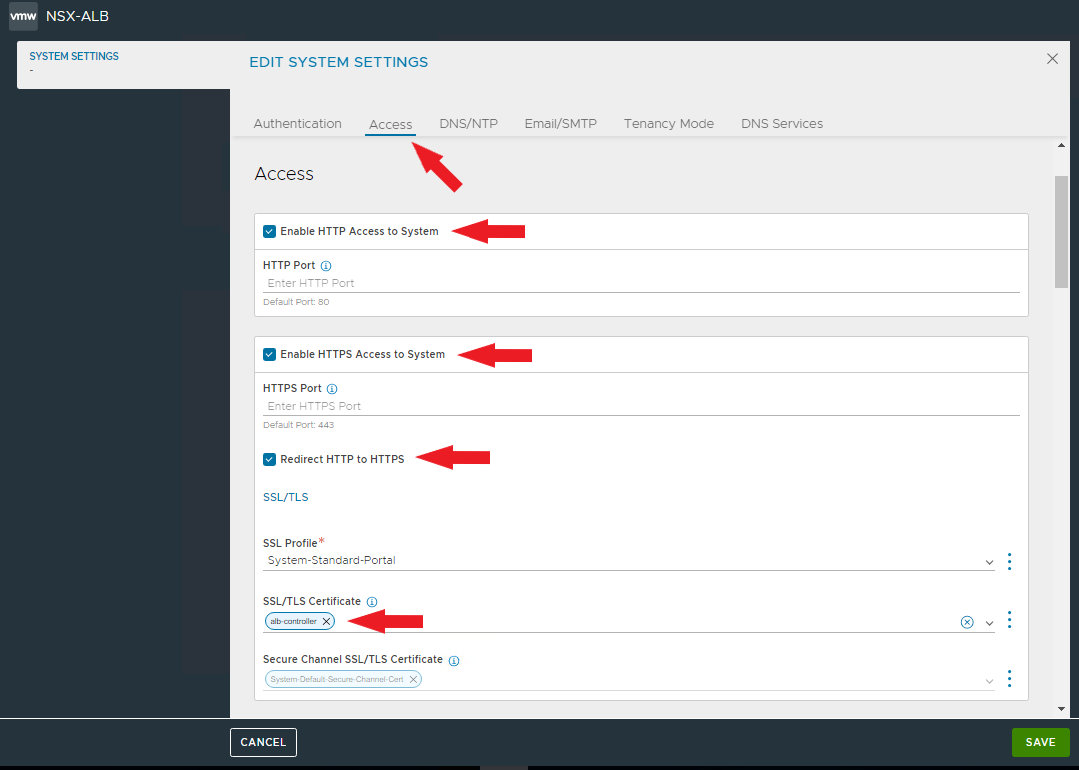

The certificate will be used to access the controller VIP, so set it in the controller's access settings.

Enable HTTP and HTTPS access as well as redirect to HTTPS.

Delete the current certificates and select the newly created one.

NOTE: once the new certificate is applied, you will need to reconnect to the controller

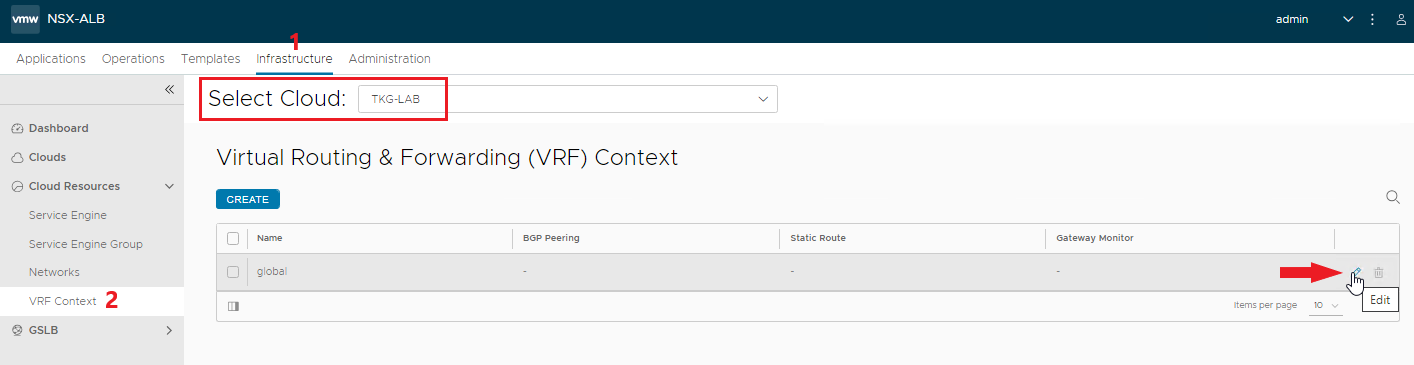

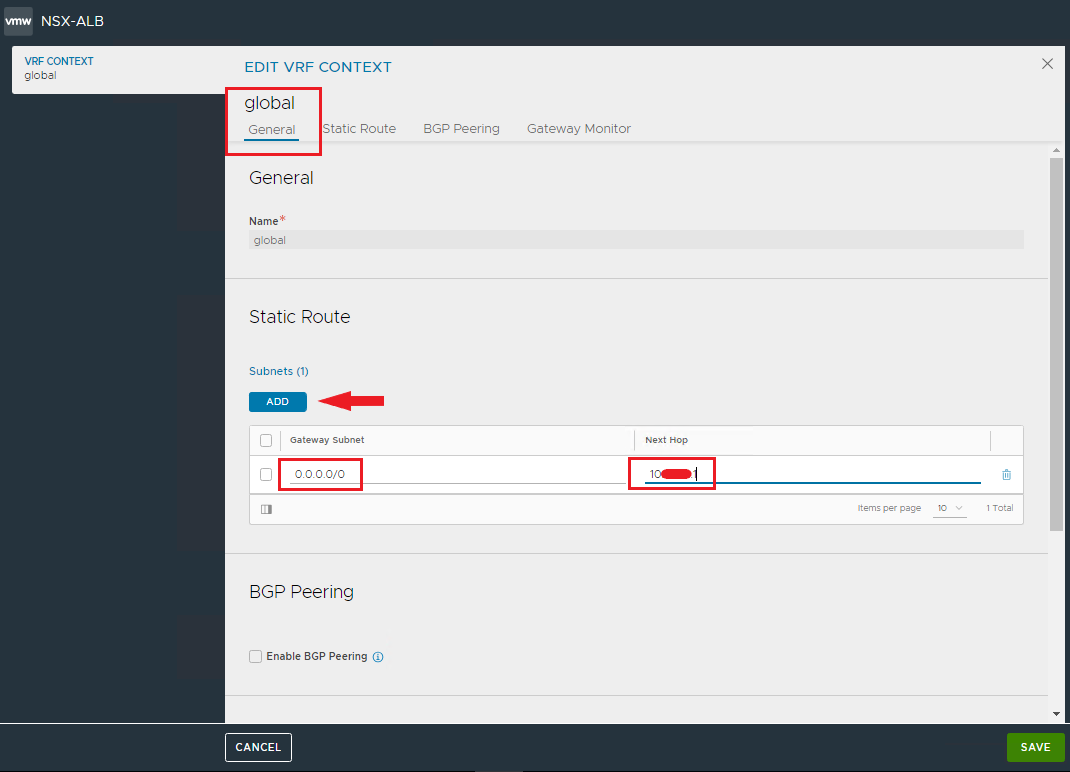

What remains is to add the default route for traffic leaving the VRF associated with our Cloud zone.

NOTE: verify that our Cloud zone is selected in the Select Cloud field.

Enter the default GW for all outgoing traffic

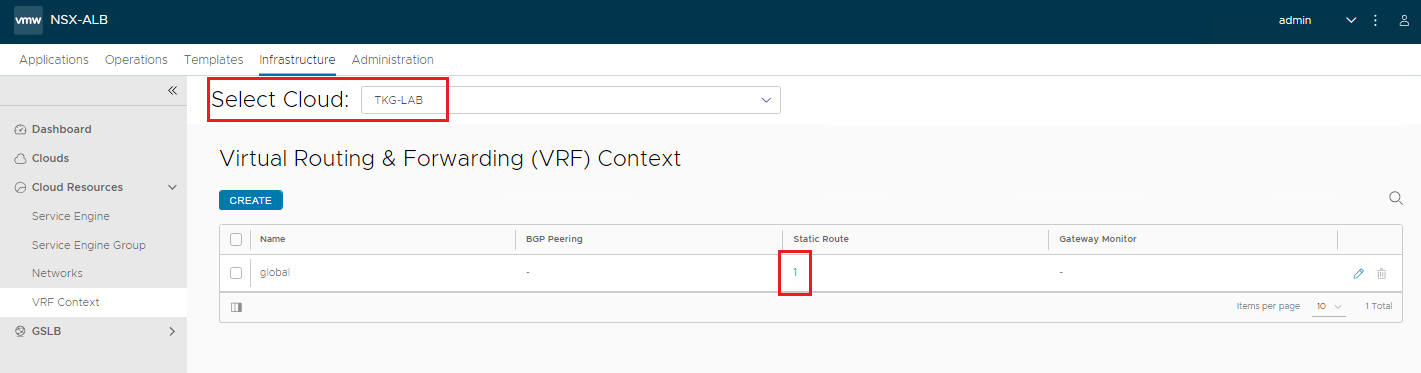

Check that the route is present

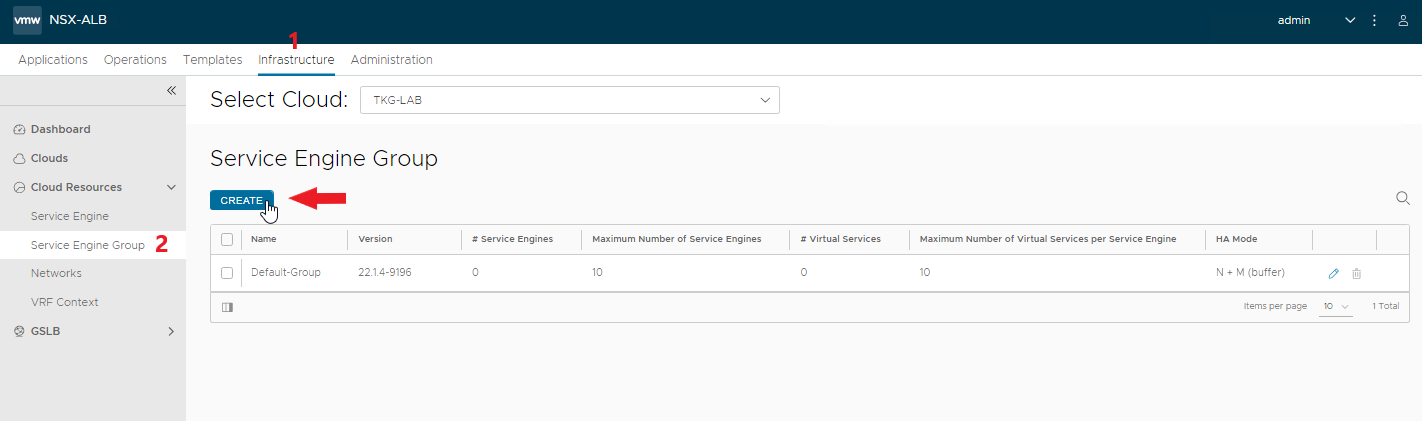

Complete our configuration by creating a Service Engine Group to be used for Tanzu. This will allow us to customize the configurations of the SEs used for Tanzu.

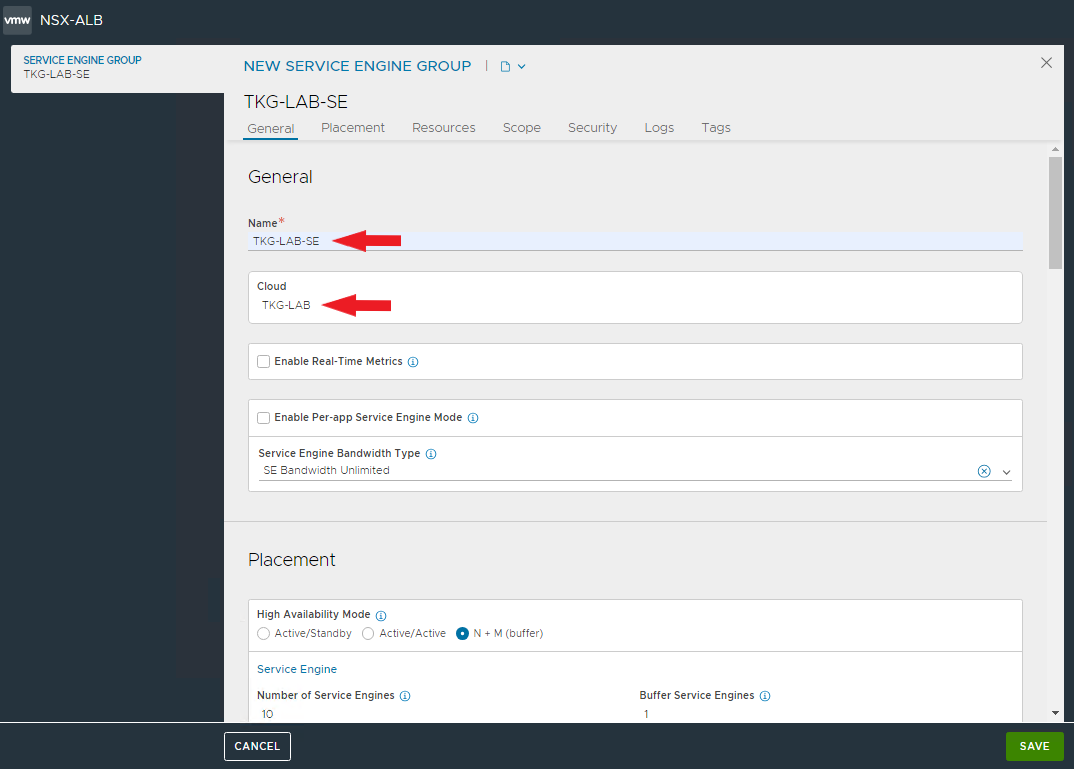

Enter the name and select the cloud zone.

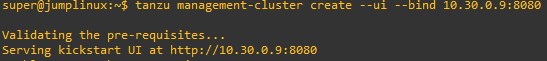

Finally, we have an ALB load balancer ready to serve our Tanzu clusters 🙂